In recent years, demand for real-time, data-driven applications has surged, bringing increased attention to Retrieval-Augmented Generation (RAG) in AI. RAG-based applications let businesses draw on large amounts of data to give users relevant, contextually accurate responses in real time. The approach combines a retrieval mechanism, which pulls relevant data from a predefined source, with a generation model that tailors its response to the specific input and context. As a result, RAG applications can deliver more accurate, up-to-date, and efficient outputs, making them a valuable asset across industries from customer service to healthcare and beyond. A 2023 study revealed that 36.2% of enterprise large language model (LLM) use cases incorporated RAG techniques.

The applications of RAG in AI are incredibly diverse, enabling businesses to innovate in areas like intelligent virtual assistants, personalized recommendations, and automated content creation. RAG-based solutions can significantly enhance user experience by providing information-rich, context-aware interactions that go beyond the traditional capabilities of generative AI models.

In this blog, we will explore the fundamentals of RAG app development, including the tools, processes, and best practices for creating effective RAG applications, as well as real-world use cases that demonstrate its transformative impact across various sectors.

The Basics of Retrieval Augmented Generation

AI Retrieval-Augmented Generation (RAG) is a technique that amplifies the abilities of large language models (LLMs) by incorporating advanced information retrieval processes. Essentially, RAG systems locate relevant documents or data segments in response to a specific query, using this sourced information to produce highly accurate and contextually relevant answers. By grounding responses in actual, external data, this approach notably reduces the occurrence of hallucinations—where models generate plausible but inaccurate or nonsensical content.

Key concepts in AI RAG include:

- Retrieval Component: This part of a RAG system sifts through a knowledge base to identify pertinent information that can inform the model’s answer.

- Generation Component: With the retrieved information, the language model constructs a response that is both coherent and contextually meaningful.

- Knowledge Base: A structured repository of information from which the retrieval component extracts data.

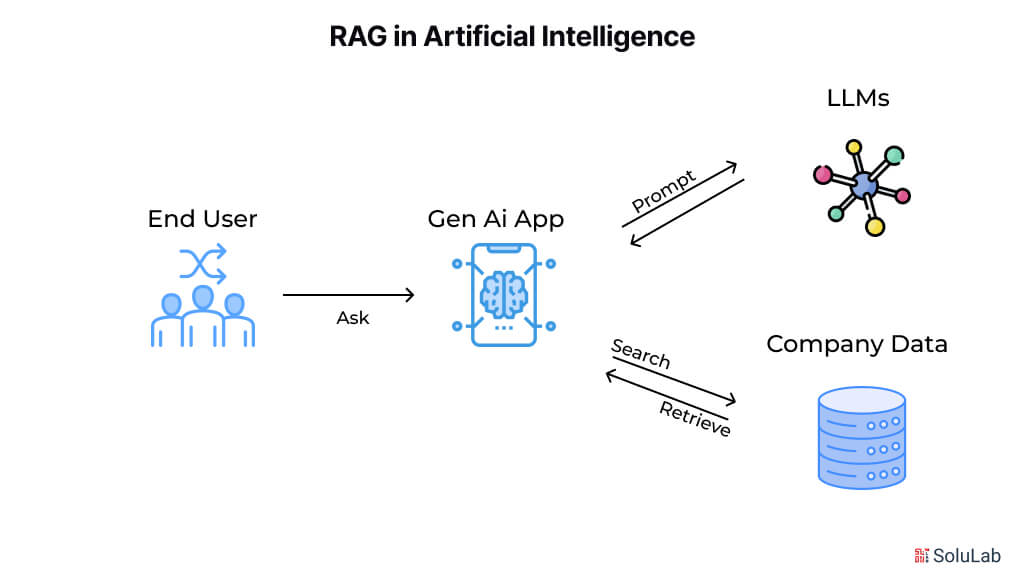

What is RAG in Artificial Intelligence?

Retrieval-augmented generation (RAG) in AI is a sophisticated method that enhances the accuracy and contextual relevance of AI responses by combining data retrieval with language generation capabilities. Unlike traditional models that depend solely on pre-trained knowledge, RAG systems actively pull information from external sources or databases, allowing for responses that are grounded in real-world, up-to-date data. This approach not only improves response reliability but also expands the model’s capacity to handle a broader range of queries with accuracy.

The RAG framework consists of three main components, each playing a critical role in the process:

- Retrieval Component: This component searches a designated knowledge base or document repository to identify data directly related to the query. By locating relevant content, the retrieval component provides foundational information, reducing the risk of hallucinations where models produce inaccurate or nonsensical responses.

- Generation Component: Using the information fetched by the retrieval component, the generation component crafts a response that is coherent, contextually appropriate, and informed by actual data. This ensures that the output is both relevant and accurate, significantly enhancing the model’s reliability.

- Knowledge Base: RAG systems rely on a structured knowledge repository where information is stored for retrieval. This database can include articles, documents, or other sources that the retrieval component accesses to fetch relevant information, keeping the responses grounded in real knowledge.

RAG in AI offers a robust solution for applications across industries, from customer support to healthcare, where accurate and fact-based AI responses are critical. By pairing retrieval with generation, RAG systems are well-equipped to provide contextually accurate, trustworthy responses that set a new standard in artificial intelligence applications.

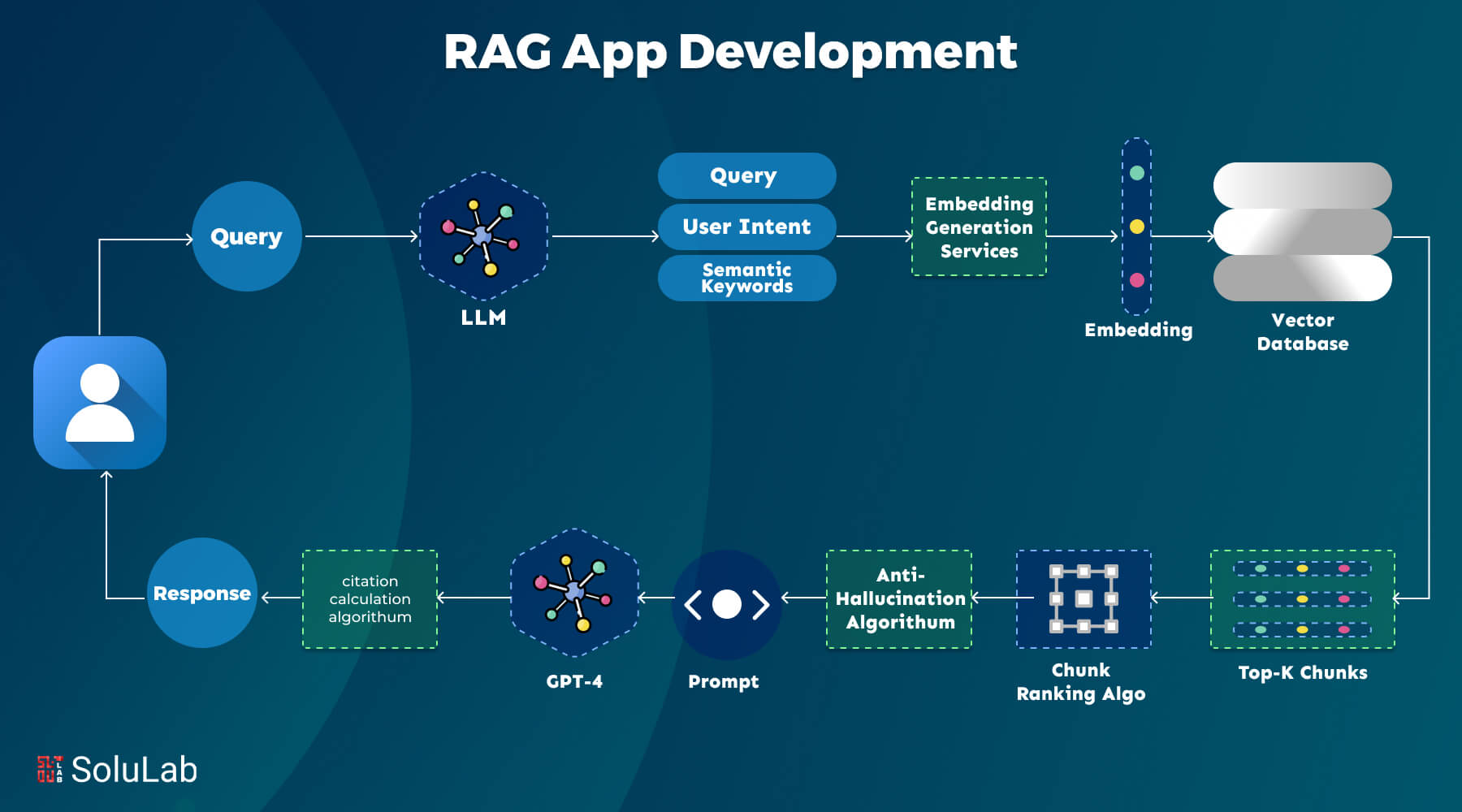

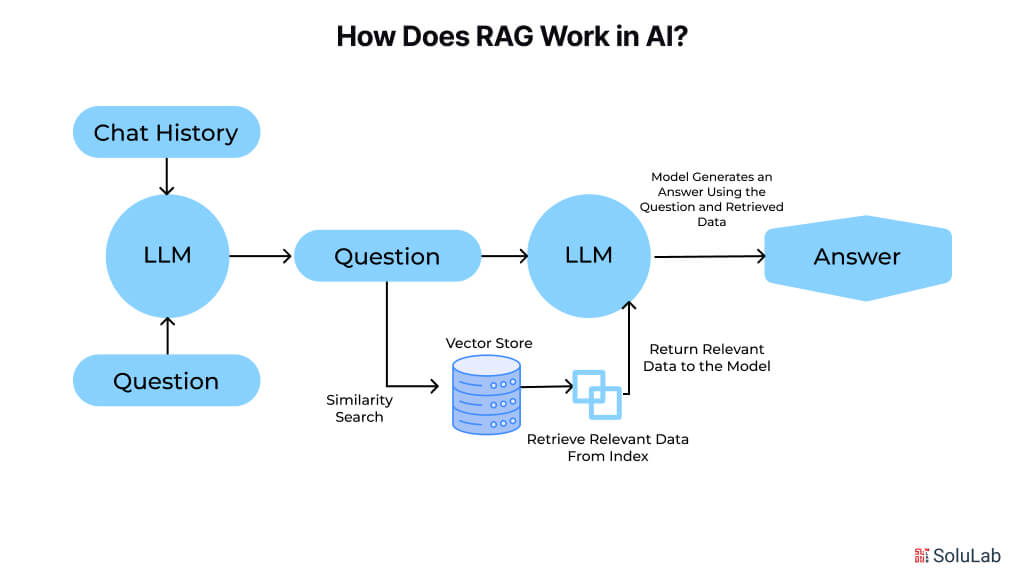

How Does RAG Work in AI?

RAG works by combining retrieval and generation mechanisms so that AI models can provide accurate, contextually relevant responses grounded in real data. This approach is particularly useful for complex applications requiring reliable information, as it pairs the data-fetching power of a retrieval system with the language generation abilities of an AI model.

Here’s an overview of the main steps a RAG system follows to answer a query:

1. Query Processing: When a user inputs a query, the RAG system first processes this input to determine what type of information is needed. The query is analyzed to identify keywords and context, preparing it for the retrieval stage.

2. Retrieval of Relevant Data: The retrieval component of the RAG system scans a designated knowledge base or document repository to locate the most relevant information based on the query. This component searches through databases, articles, or other structured data to identify specific content that aligns closely with the input query.

3. Contextual Response Generation: Once relevant data is retrieved, the generation component of the system takes over. The AI model then generates a response by synthesizing the retrieved information with its pre-trained knowledge. This response is coherent, contextually aligned, and informed by actual data, significantly reducing the likelihood of generating incorrect or hallucinated responses.

4. Output Delivery: Finally, the model delivers an answer that is both accurate and relevant, improving user experience and meeting the demands of more information-intensive queries. The response is directly informed by real data, enhancing the credibility and reliability of the output.
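The four steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the knowledge base is a hard-coded list, retrieval is simple word overlap standing in for vector search, and `generate` is a hypothetical callable that you would replace with a real LLM call.

```python
import re
from collections import Counter

# Toy knowledge base (assumption); a real system would hold document chunks.
KNOWLEDGE_BASE = [
    "RAG combines a retrieval component with a generation component.",
    "FAISS is a library for efficient similarity search over vectors.",
    "Hallucinations are plausible but inaccurate model outputs.",
]

def process_query(text: str) -> Counter:
    """Step 1: normalize text into lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, k: int = 2) -> list[str]:
    """Step 2: rank documents by word overlap with the query.

    Word overlap is a stand-in for the vector search a real system would use.
    """
    q = process_query(query)
    scored = []
    for doc in KNOWLEDGE_BASE:
        overlap = sum((q & process_query(doc)).values())
        scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no words with the query.
    return [doc for score, doc in scored[:k] if score > 0]

def build_prompt(query: str, passages: list[str]) -> str:
    """Step 3 (input side): combine retrieved passages with the query."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"

def answer(query: str, generate) -> str:
    """Steps 3 and 4: call the (hypothetical) LLM and return its output."""
    return generate(build_prompt(query, retrieve(query)))

if __name__ == "__main__":
    # Placeholder "LLM" that echoes the prompt; swap in a real model call.
    print(answer("What is FAISS used for?", generate=lambda prompt: prompt))
```

Passing the model in as a function keeps the retrieval logic independent of any particular LLM provider.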

RAG in AI applications is transforming various industries, from customer support to healthcare and education. By grounding responses in real-time data, RAG models deliver more precise answers and help organizations build intelligent systems that can handle complex, data-driven inquiries with ease. This approach, when aligned with responsible AI principles, ensures that applications not only provide up-to-date and factually accurate answers but also adhere to ethical standards and fairness. This makes RAG in AI a critical advancement in artificial intelligence, empowering organizations to address intricate challenges responsibly.

RAG Applications in AI

Retrieval-augmented generation (RAG) represents a paradigm shift in how artificial intelligence applications operate, particularly when utilizing large language models (LLMs). RAG in AI applications combines a generation model with a retrieval system to answer questions more accurately, provide comprehensive responses, and enhance the reliability of information. This approach is particularly valuable in situations where a purely generative model might lack the specific information required to answer a query with precision. By integrating external knowledge sources and retrieval mechanisms, RAG-based LLM applications can deliver more accurate, contextually relevant, and up-to-date responses.

Key Applications of RAG in AI:

- Question-Answering Systems

One of the most common uses of RAG in AI applications is in question-answering systems. Here, RAG-based models retrieve relevant documents or data points in real-time and use this information to generate precise answers. This application is particularly useful for industries with a high demand for rapid and accurate information, such as healthcare, legal services, and customer support.

- Enhanced Chatbots and Virtual Assistants

RAG-based LLM applications are transforming the capabilities of chatbots and virtual assistants. Traditional chatbots rely heavily on pre-defined scripts or generative models, which may not always provide relevant information. By integrating RAG, these virtual assistants can access vast databases or even the internet to retrieve the latest data, thus enhancing their ability to answer queries accurately.

- Content Creation and Summarization

Content summarization and content creation can benefit immensely from the applications of RAG in AI. For example, when tasked with generating summaries of lengthy reports or articles, RAG-based systems can retrieve core information and offer a condensed, insightful version. Similarly, when creating content, a RAG model can pull in relevant data from trusted sources, ensuring the accuracy and relevance of the information it generates.

- Personalized Recommendations

In e-commerce, media, and social platforms, RAG models are now used to create more personalized recommendations. The retrieval component fetches information based on user behavior, preferences, or browsing history, which is then processed by the generation model to deliver tailored recommendations. This hybrid approach provides a richer, user-centered experience compared to traditional recommendation systems.

- Real-Time Decision Support in Healthcare

In healthcare, RAG-based LLM applications assist practitioners by retrieving the latest research, patient records, or diagnostic guidelines relevant to a patient’s case. The generation model then synthesizes this information, offering insights or potential treatment options. This application can improve decision-making, offering practitioners quick access to reliable, up-to-date knowledge.

- Financial Analysis and Forecasting

Finance professionals use applications of RAG in AI to analyze vast amounts of data quickly. RAG models retrieve recent economic indicators, market trends, or industry reports, generating analyses that support decision-making, trading strategies, and financial planning. By drawing on current data, RAG-based systems can ground their forecasts and insights in the latest available information, complementing traditional predictive models.

- Educational Tools and Intelligent Tutoring Systems

RAG-enabled educational platforms provide personalized and contextually relevant content to learners by retrieving relevant information on the fly. This can be especially useful in answering specific student queries, recommending resources, or delivering customized lessons based on each student’s learning needs.

RAG-based LLM applications represent an exciting evolution in AI, combining the generative power of LLMs with the precision of information retrieval systems. This hybrid approach addresses the limitations of traditional AI systems, delivering applications that are not only more informative and relevant but also more adaptable to real-world demands.

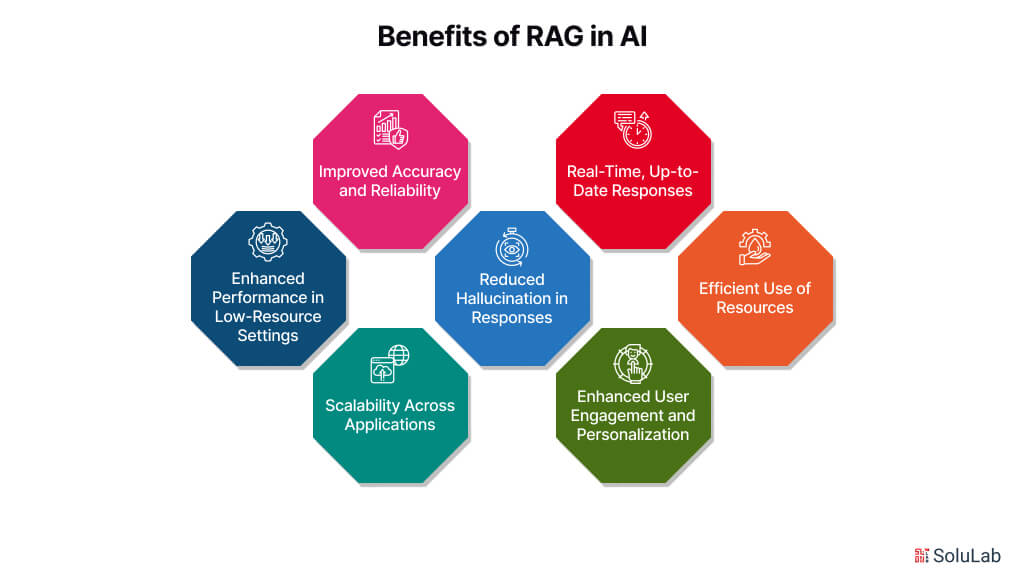

Benefits of RAG in AI

Retrieval-augmented generation (RAG) has emerged as a groundbreaking approach in the field of artificial intelligence, offering a range of benefits that make it a preferred choice for various applications. By combining retrieval mechanisms with generation capabilities, RAG produces AI systems that are highly accurate, reliable, and contextually aware. This hybrid framework addresses some of the core limitations of traditional generative AI and enhances application performance across industries. Let’s explore the main benefits of RAG and how retrieval-augmented generation development optimizes AI solutions.

Key Benefits of RAG for AI development companies include:

- Improved Accuracy and Reliability

RAG models retrieve relevant information from extensive data sources before generating responses, resulting in higher accuracy and dependability. This is especially valuable in industries that require precise information, such as healthcare, finance, and law. RAG app development services offer businesses enhanced accuracy, reducing the risk of misinformation and making AI outputs significantly more reliable.

- Real-Time, Up-to-Date Responses

Traditional language models are limited by their training data and may not account for real-time information. In contrast, retrieval-augmented generation enables AI to fetch the latest data before generating a response. This feature is essential in dynamic fields like news, stock market analysis, or technical support, where staying current is critical.

- Efficient Use of Resources

RAG models use resources efficiently because new or changing knowledge is added to the knowledge base rather than built into the model through retraining. Avoiding frequent fine-tuning lowers computational costs, making RAG app development a cost-effective solution for businesses looking to deploy large-scale AI systems without exceeding budgets.

- Enhanced User Engagement and Personalization

By drawing on user-specific data or preferences, RAG applications can deliver highly personalized experiences. For example, a virtual assistant powered by RAG app development services can use retrieval mechanisms to customize responses based on prior user interactions, improving engagement and building a more meaningful user experience.

- Scalability Across Applications

RAG-based models are versatile and adaptable, allowing them to be scaled across a wide variety of applications. Whether for customer service, content generation, recommendation engines, or decision support systems, retrieval-augmented generation makes it easier for companies to implement AI models that meet specific operational needs and expand as those needs evolve.

- Enhanced Performance in Low-Resource Settings

RAG can help in low-resource environments because the model does not need to hold all domain knowledge in its parameters: retrieving only the passages required can let a smaller model answer questions that would otherwise call for a larger one. This can make RAG app development a good fit for businesses or regions with limited access to advanced hardware, broadening access to high-quality AI services.

- Reduced Hallucination in Responses

Generative models can sometimes produce “hallucinations,” or incorrect responses based on assumptions rather than facts. The retrieval component in RAG acts as a safeguard against this, significantly reducing the likelihood of such inaccuracies. By partnering with RAG app development services, businesses can achieve higher response quality and minimize the risk of misleading outputs.

RAG app development services and retrieval-augmented generation development provide businesses with highly accurate, resource-efficient, and scalable AI solutions that adapt to various use cases. The integration of retrieval and generation in RAG models enhances AI capabilities, allowing for applications that deliver reliable, context-aware, and personalized interactions. These benefits are transforming the potential of AI across sectors.

DID YOU KNOW?

The growing importance of RAG is reflected in substantial financial investments. For instance, Contextual AI, a company specializing in RAG techniques, secured $80 million in Series A funding in August 2024 to advance its AI model enhancement capabilities.

How to Develop a RAG Application From Start to Finish?

Developing a Retrieval-Augmented Generation (RAG) application requires careful planning and implementation to fully leverage its dual capabilities in data retrieval and generation. Here’s a step-by-step guide to creating a RAG application, from initial setup to deployment.

1. Setting Up Your Development Environment

The first step involves setting up the tools and frameworks you’ll need. Start by choosing a machine learning framework like PyTorch or TensorFlow and install relevant libraries for data handling, processing, and natural language processing (NLP). For RAG, you’ll likely need libraries such as Hugging Face Transformers for large language models (LLMs) and FAISS or Elasticsearch for efficient data retrieval. It’s also essential to set up a Python virtual environment to manage dependencies, especially for RAG applications that require various NLP and data management libraries.

- Key Tools: PyTorch/TensorFlow, Hugging Face Transformers, FAISS/Elasticsearch, Python virtual environment
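Once the virtual environment is active, a quick check can confirm that the libraries are importable. The sketch below is one way to do it; the module list is an assumption based on the tools named above (these are import names, which can differ from pip package names), so adjust it to the stack you actually choose.

```python
from importlib.util import find_spec

# Import names for the tools mentioned above (illustrative; edit as needed).
REQUIRED_MODULES = ["torch", "transformers", "faiss", "elasticsearch"]

def check_modules(names):
    """Return a dict mapping each top-level module name to True if it is importable."""
    return {name: find_spec(name) is not None for name in names}

if __name__ == "__main__":
    for name, ok in check_modules(REQUIRED_MODULES).items():
        print(f"{name}: {'installed' if ok else 'missing'}")
```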

2. Data Preparation

RAG models rely heavily on high-quality data for both retrieval and generation components. Data preparation is essential to ensure that your application has the right information at its disposal. Here’s what to consider:

- Collect and Clean Data: Gather data relevant to the use case, such as documents, web pages, or product descriptions. Clean the data to remove any unnecessary information, standardize formats, and handle any inconsistencies.

- Create Indexes for Retrieval: Structure the data in a way that allows it to be indexed and retrieved quickly. Use a tool like FAISS or Elasticsearch to create indexes of the data, making retrieval more efficient for large datasets.

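- Tokenize Data: Convert the text data into a format compatible with the language model. Tokenization splits the text into smaller pieces (tokens) that the model can process. Long documents are typically also split into shorter passages so that each one fits within the model’s input limit.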

- Output: A clean, indexed, and tokenized dataset ready for the retrieval component.
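As a rough illustration of the cleaning and splitting steps, the sketch below strips HTML-like tags, normalizes whitespace, and cuts the text into overlapping word windows. It assumes whitespace-separated words as a stand-in for real model tokens, and the `size` and `overlap` defaults are arbitrary placeholders rather than recommended values.

```python
import re

def clean_text(raw: str) -> str:
    """Strip HTML-like tags and collapse runs of whitespace."""
    no_tags = re.sub(r"<[^>]+>", " ", raw)
    return re.sub(r"\s+", " ", no_tags).strip()

def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words.

    Consecutive windows share `overlap` words so that a sentence cut at a
    boundary still appears whole in at least one chunk.
    """
    if not 0 <= overlap < size:
        raise ValueError("overlap must be >= 0 and smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reached the end of the text
    return chunks
```

For example, `chunk_words("a b c d e f g", size=4, overlap=2)` yields three chunks: `"a b c d"`, `"c d e f"`, and `"e f g"`.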

3. Building the Retrieval Component

The retrieval component is a core part of any RAG application. It fetches relevant information from the dataset based on the input query, enabling the generation model to produce more contextually accurate responses.

- Implement a Vector Store: Use vector embeddings to store the data in a way that makes it easily searchable by context. This involves creating vector representations of the text using pre-trained models, such as BERT or Sentence Transformers.

- Configure the Search System: Set up FAISS or Elasticsearch to search the vectorized data. Implement search algorithms to retrieve the top ‘n’ documents that match the query.

- Optimize for Speed: Retrieval speed is critical for responsive applications, so ensure that the indexing and search process is optimized for minimal latency.

- Output: A fully functional retrieval system that can fetch relevant documents or data points in response to a user query.
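To make the idea of a vector store concrete, here is a minimal in-memory sketch using only the standard library. Two things in it are assumptions for illustration: `embed` is a toy hashing embedding standing in for a pre-trained model such as Sentence Transformers, and the linear scan in `search` stands in for what FAISS or Elasticsearch would do at scale.

```python
import hashlib
import math
import re

DIM = 64  # hypothetical embedding size for this toy example

def embed(text: str) -> list[float]:
    """Toy hashing embedding: each word increments one of DIM buckets.

    The vector is L2-normalized so a dot product equals cosine similarity.
    """
    vec = [0.0] * DIM
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.md5(word.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec

class VectorStore:
    """In-memory store that keeps texts alongside their vectors."""

    def __init__(self):
        self.texts = []
        self.vectors = []

    def add(self, text: str) -> None:
        self.texts.append(text)
        self.vectors.append(embed(text))

    def search(self, query: str, n: int = 3):
        """Return the top-n (score, text) pairs by cosine similarity."""
        q = embed(query)
        scored = [
            (sum(a * b for a, b in zip(q, vec)), text)
            for vec, text in zip(self.vectors, self.texts)
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:n]
```

A linear scan like this is fine for a few thousand passages; beyond that, an approximate nearest-neighbor index is the usual way to keep latency low.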

4. Building the Generation Component

Once the retrieval component is established, the next step is to develop the generation component, which synthesizes the retrieved data into coherent and contextually relevant responses.

- Select a Language Model: Choose a large language model (LLM) like GPT-3, GPT-4, or T5, which is well-suited for generating natural language responses. Open models such as T5 can be loaded with frameworks like Hugging Face Transformers, while hosted models such as GPT-4 are accessed through the provider’s API.

- Fine-tune (if needed): For applications requiring a specific tone or knowledge base, consider fine-tuning the model on domain-specific data to improve relevance and response accuracy.

- Integrate with the Retrieval System: Implement a mechanism that passes the retrieved documents to the generation model. This typically involves combining the retrieved content with the user’s query as input for the LLM.

- Output: A generative model capable of producing context-aware, accurate responses based on the data retrieved by the retrieval component.
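The integration step usually comes down to prompt assembly. The sketch below shows one possible shape: it numbers the retrieved passages, keeps as many as fit in a character budget, and hands the result to the model. The template wording, the `max_chars` budget, and the `llm` callable are all assumptions for illustration; a real application would count tokens with the model's own tokenizer and call an actual model.

```python
from typing import Callable

# Illustrative template; the exact wording is an assumption, not a standard.
PROMPT_TEMPLATE = (
    "Answer the question using only the context below. "
    "If the context is insufficient, say so.\n\n"
    "Context:\n{context}\n\nQuestion: {question}\nAnswer:"
)

def build_prompt(question: str, passages: list[str], max_chars: int = 2000) -> str:
    """Number the passages, keep those that fit in max_chars, fill the template."""
    kept, used = [], 0
    for i, passage in enumerate(passages, start=1):
        entry = f"[{i}] {passage}"
        if used + len(entry) > max_chars:
            break  # stop before the context budget is exceeded
        kept.append(entry)
        used += len(entry)
    return PROMPT_TEMPLATE.format(context="\n".join(kept), question=question)

def generate_answer(question: str, passages: list[str],
                    llm: Callable[[str], str]) -> str:
    """Pass the assembled prompt to any callable that maps a prompt to text."""
    return llm(build_prompt(question, passages))
```

Numbering the passages makes it possible to ask the model to cite which passage supports each claim, which helps when reviewing answers for factual accuracy.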

5. Evaluation and Testing

Testing and evaluation are crucial to ensuring the application’s accuracy, responsiveness, and reliability. Focus on evaluating both the retrieval and generation components.

- Quality of Retrieval: Measure the relevance of the retrieved documents to the input query. Use metrics like Mean Reciprocal Rank (MRR) or Precision at K to assess retrieval quality.

- Generation Quality: Evaluate the quality of the generated responses by assessing coherence, relevance, and factual accuracy. Metrics like ROUGE and BLEU can be useful, alongside human evaluation.

- End-to-End Testing: Test the entire system to ensure that retrieval and generation work well together, offering seamless and relevant responses.

- Output: A tested, fine-tuned application ready for deployment.
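The two retrieval metrics named above are simple to compute by hand. The sketch below assumes each test query comes with a ranked list of retrieved document IDs and a set of IDs judged relevant; the function names are illustrative.

```python
def precision_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the top-k retrieved IDs that are relevant."""
    if k <= 0:
        raise ValueError("k must be positive")
    return sum(1 for doc_id in retrieved[:k] if doc_id in relevant) / k

def reciprocal_rank(retrieved: list[str], relevant: set[str]) -> float:
    """1 / rank of the first relevant result, or 0.0 if none was retrieved."""
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0

def mean_reciprocal_rank(runs: list[tuple[list[str], set[str]]]) -> float:
    """Average reciprocal rank over (retrieved, relevant) pairs, one per query."""
    if not runs:
        return 0.0
    return sum(reciprocal_rank(ret, rel) for ret, rel in runs) / len(runs)
```

For instance, if the first relevant document appears at rank 1 for one query and rank 2 for another, the MRR is (1 + 0.5) / 2 = 0.75.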

6. Deployment and Scalability

Deploying a RAG application effectively requires selecting an environment that supports scalability, as user demand can vary widely.

- Choose a Deployment Platform: Deploy the application on a cloud platform like AWS, Azure, or Google Cloud, which offers services to handle both computation and storage needs.

- Implement Load Balancing and Auto-Scaling: Use load balancers and auto-scaling to ensure the application can handle varying levels of traffic. This is particularly crucial for RAG applications, where retrieval and generation both require significant computational resources.

- Optimize for Cost and Latency: Consider using caching strategies to reduce repeated retrieval queries, minimize costs, and reduce latency in generating responses.

- Output: A deployed RAG application capable of handling real-world traffic with an infrastructure that supports scalability.
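The caching idea can be illustrated with a small time-based cache placed in front of the retrieval call. This is a sketch only: `TTLCache` and `normalize` are hypothetical helpers, the cache lives in a single process, and a deployed system would more likely use a shared cache service.

```python
import time

class TTLCache:
    """Tiny time-based cache for retrieval results (single process only)."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._store = {}

    def get_or_compute(self, key, compute):
        """Return the cached value for key, or call compute(key) and cache it."""
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]
        value = compute(key)
        self._store[key] = (now, value)
        return value

def normalize(query: str) -> str:
    """Lowercase and collapse whitespace so trivially different queries share a key."""
    return " ".join(query.lower().split())
```

A short time-to-live keeps answers reasonably fresh when the knowledge base changes often, while still absorbing bursts of repeated queries.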

By following this structured approach, you can develop a RAG application that is reliable, scalable, and efficient from start to finish. From setting up the environment and preparing data to deploying the final product, these steps ensure a robust RAG application that maximizes the benefits of retrieval-augmented generation in AI.

The Bottom Line

In conclusion, RAG app development is transforming AI technology by combining the strengths of retrieval and generation to produce more accurate, contextually relevant, and up-to-date responses. Through a structured approach, from setting up the development environment to deploying and scaling the application, RAG empowers AI systems across industries such as healthcare, finance, customer service, and education. This technology addresses some of the major limitations of traditional generative models by enabling them to access and use external knowledge bases, improving accuracy, real-time relevance, and user experience. The versatility of RAG in AI applications means that businesses can create tailored solutions that align closely with specific operational needs while maintaining scalability and reliability.

As an AI development company, SoluLab specializes in providing RAG app development services to clients seeking advanced AI solutions. With our team of experts in machine learning, natural language processing, and RAG technology, we support businesses in crafting custom RAG applications that integrate seamlessly with existing workflows and maximize the benefits of retrieval-augmented generation. Whether you are looking to enhance customer engagement, improve decision-making, or deploy intelligent virtual assistants, you can hire AI developers from SoluLab to help bring your vision to life with powerful, scalable RAG solutions. Contact us today to explore how our RAG app development expertise can elevate your AI projects!

FAQs

1. What is Retrieval-Augmented Generation (RAG) in AI applications?

Retrieval-augmented generation (RAG) is an AI approach that combines retrieval and generation mechanisms to enhance the accuracy and relevance of generated responses. In RAG-based models, an information retrieval component first fetches relevant data from an external knowledge source, which is then used by the generation model (such as an LLM) to produce responses. This makes RAG particularly useful for applications requiring up-to-date information or precise answers based on a large dataset.

2. How does RAG app development differ from traditional AI development?

RAG app development differs from traditional AI development by integrating a retrieval mechanism that dynamically pulls relevant information in real-time. Unlike traditional generative models, which rely solely on pre-existing data in their training, RAG applications use both a retrieval component for data access and a generation component for creating responses. This hybrid approach allows RAG apps to generate more accurate, contextually relevant, and current responses compared to purely generative AI models.

3. What are the main benefits of using RAG in AI applications?

Using RAG in AI applications offers multiple benefits, including improved accuracy and reliability, real-time responses, and reduced risk of misinformation. RAG models are particularly suited for applications like customer service, virtual assistants, and recommendation systems, where accurate, context-aware responses are crucial. Additionally, RAG helps optimize computational resources by keeping knowledge in an external store that can be updated without retraining the model, making it a cost-effective choice for scaling AI applications.

4. What industries can benefit most from RAG app development services?

Industries with a high demand for precise, up-to-date information can benefit greatly from RAG app development services. These include healthcare (for providing medical information), finance (for real-time market analysis), customer service (for personalized support), and education (for on-demand tutoring). By enhancing user engagement and accuracy, RAG applications are valuable in any sector that requires tailored responses based on dynamic information.

5. How can SoluLab help with RAG app development?

As an AI development company, SoluLab specializes in RAG app development services, providing end-to-end support for businesses looking to create AI solutions that leverage Retrieval-Augmented Generation. SoluLab’s team of experts handles every stage, from setting up the development environment to deployment and scalability, ensuring that each RAG application is customized to meet specific business needs. Contact us to learn how our RAG expertise can help bring your AI projects to life!