In the ever-expanding digital landscape, the importance of robust cybersecurity measures cannot be overstated. With cyber threats becoming increasingly sophisticated, the need for intelligent, adaptable defense mechanisms has grown exponentially. Artificial Intelligence (AI) has emerged as a game-changer in this realm, revolutionizing the way we protect our digital assets.

This blog delves into the captivating journey of AI in cybersecurity, tracing its evolution from the rudimentary rule-based systems to the cutting-edge realm of deep learning.

Rule-Based Systems in Cybersecurity

Before AI made its presence felt, cybersecurity relied heavily on rule-based systems. These systems operated on predefined sets of rules and signatures. They were effective at thwarting known threats but had glaring limitations: they struggled with zero-day attacks and with evolving threats that didn’t fit neatly into predetermined patterns.

For example, antivirus software built on rule-based detection could detect and quarantine a virus only if it matched a predefined signature. If a new strain of malware emerged, the system remained blind to it until a new rule was created. This reactive approach left systems vulnerable during the crucial gap between a new threat’s emergence and the update of the security rules.
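
The signature-matching behavior described above can be sketched in a few lines. This is a minimal illustration, assuming signatures are exact SHA-256 digests of known-malicious files; real products also use byte patterns and heuristics, and the `KNOWN_BAD_SHA256` set and `is_known_malware` function are hypothetical names invented for this example.

```python
import hashlib

# Hypothetical signature database: digests of files already known to be malicious.
KNOWN_BAD_SHA256 = {
    hashlib.sha256(b"malicious payload v1").hexdigest(),
}


def is_known_malware(file_bytes: bytes) -> bool:
    """Return True only when the file's digest exactly matches a stored signature."""
    return hashlib.sha256(file_bytes).hexdigest() in KNOWN_BAD_SHA256
```

A file that differs from the known sample by a single byte produces a different digest, so `is_known_malware(b"malicious payload v2")` returns `False` until someone adds a new signature. That is the time gap the paragraph above describes.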

Machine Learning in Cybersecurity

Machine learning marked the first significant leap for AI in cybersecurity. Unlike rule-based systems, machine learning algorithms could learn from data. They analyzed patterns, anomalies, and behaviors to detect threats, even those not covered by any predefined rule. This proactive approach opened new possibilities in the battle against cyber adversaries.

Supervised learning, a branch of machine learning, allowed security systems to be trained on labeled datasets. By learning from historical data, these systems could make informed decisions about the nature of incoming data and identify potential threats. For instance, a supervised learning model could identify known phishing emails by recognizing common characteristics shared among them.
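
To make the phishing example concrete, here is a small sketch of supervised learning using a naive Bayes text classifier written with only the standard library. The training emails, the `"phish"`/`"ham"` labels, and the function names are all made up for illustration; a production system would use far more data and richer features.

```python
import math
from collections import Counter, defaultdict

# Hypothetical labeled dataset: (email text, label).
TRAINING_EMAILS = [
    ("verify your account password now", "phish"),
    ("urgent click link to verify account", "phish"),
    ("meeting agenda for monday attached", "ham"),
    ("lunch on friday with the team", "ham"),
]


def train_naive_bayes(labeled_emails):
    """Count words per label and documents per label."""
    word_counts = defaultdict(Counter)
    doc_counts = Counter()
    for text, label in labeled_emails:
        doc_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocab = set()
    for counts in word_counts.values():
        vocab.update(counts)
    return word_counts, doc_counts, vocab


def classify(text, model):
    """Return the label with the highest log-probability for the text."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    scores = {}
    for label, counts in word_counts.items():
        score = math.log(doc_counts[label] / total_docs)  # log prior
        total_words = sum(counts.values())
        for word in text.lower().split():
            if word not in vocab:
                continue  # ignore words never seen in training
            # Laplace (add-one) smoothing avoids log(0) for unseen pairs.
            score += math.log((counts[word] + 1) / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)
```

With this toy data, `classify("please verify your password", train_naive_bayes(TRAINING_EMAILS))` returns `"phish"` because those words appear more often in the phishing examples than in the legitimate ones.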

Unsupervised learning algorithms, on the other hand, didn’t rely on labeled data. They analyzed incoming data to identify anomalies or deviations from the norm. This made them effective in detecting novel threats or insider attacks, where the patterns might not be predefined.
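
One simple way to flag deviations from the norm without labels is a z-score check against a learned baseline. The sketch below assumes a single numeric feature (say, logins per hour for one account) and a threshold of three standard deviations; both choices, and the function names, are illustrative assumptions rather than a recommended configuration.

```python
from statistics import mean, stdev


def fit_baseline(values):
    """Summarize normal behavior as (mean, sample standard deviation)."""
    return mean(values), stdev(values)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value whose distance from the mean exceeds `threshold` deviations."""
    mu, sigma = baseline
    if sigma == 0:
        return value != mu  # any change from a constant baseline is unusual
    return abs(value - mu) / sigma > threshold
```

For example, after `baseline = fit_baseline([4, 5, 6, 5, 4, 6, 5, 5])`, a reading of `40` is flagged while a reading of `6` is not. No one had to define in advance what an attack looks like, which is why this style of detection can surface novel or insider activity.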

Semi-supervised learning blended the best of both worlds, combining labeled data for known threats with unsupervised techniques to uncover new ones. This approach improved detection accuracy and reduced false positives.

Reinforcement learning, often associated with AI in gaming, found applications in cybersecurity as well. It enabled systems to learn from the outcomes of their own actions and adapt in real time, making them more agile in responding to evolving threats.

Emergence of Deep Learning

Deep learning, a subset of machine learning, brought about a paradigm shift in AI-driven cybersecurity. At its core were neural networks, models inspired by the human brain’s interconnected neurons. These networks could process vast amounts of data, automatically extract features, and make complex decisions.
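
As a rough picture of what a neural network computes, the sketch below runs a forward pass through a tiny two-layer network. The weights are hand-picked, hypothetical values (a real model learns them from data), the three input features are unnamed placeholders, and `threat_score` is an invented name for this example.

```python
import math


def relu(x):
    return max(0.0, x)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(w . x + b) for each neuron."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hypothetical weights: 3 input features -> 2 hidden neurons -> 1 output.
HIDDEN_W = [[1.0, -1.0, 0.5], [-0.5, 1.0, 1.0]]
HIDDEN_B = [0.0, 0.0]
OUT_W = [[1.5, -2.0]]
OUT_B = [0.0]


def threat_score(features):
    """Map a feature vector to a score between 0 and 1."""
    hidden = dense(features, HIDDEN_W, HIDDEN_B, relu)
    return dense(hidden, OUT_W, OUT_B, sigmoid)[0]
```

The hidden layer plays the role of the automatic feature extraction mentioned above: each hidden neuron combines raw inputs into an intermediate signal, and the output layer turns those signals into a decision score.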

Neural networks, with their ability to analyze unstructured data such as images, text, and network traffic, became invaluable in cybersecurity systems. They excelled in tasks such as anomaly detection, where identifying subtle deviations from normal behavior was critical. Deep learning models could recognize not only known malware but also previously unseen variants based on their underlying characteristics.

Deep learning also revolutionized the fight against phishing attacks. Neural networks could analyze email content, sender behavior, and contextual information to flag potentially malicious emails, even if they lacked familiar hallmarks of phishing attempts.

The use of deep learning in malware detection was another breakthrough. These models could identify malicious code by scrutinizing its structure and behavior, without relying on predefined signatures.

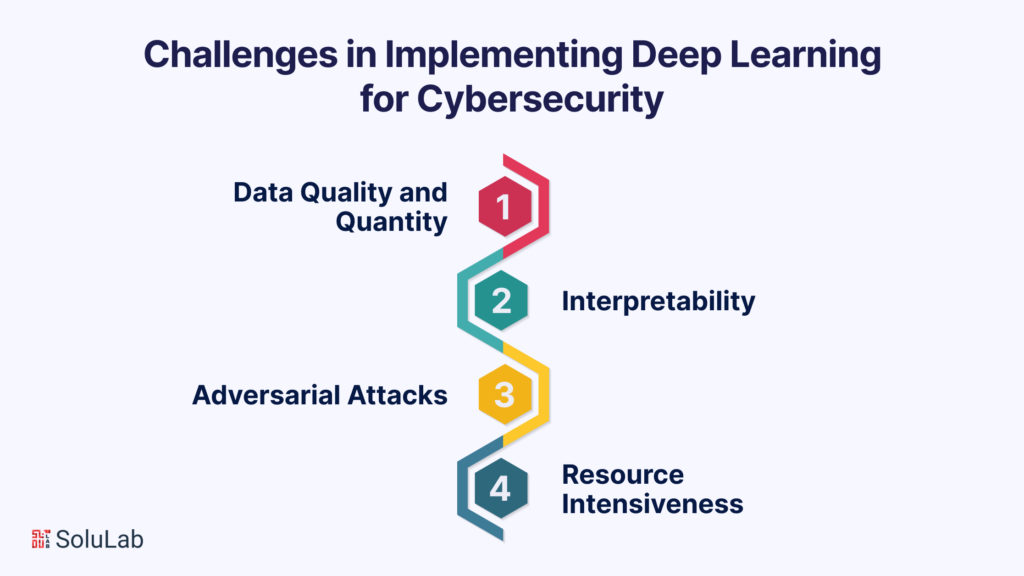

Challenges in Implementing Deep Learning for Cybersecurity

While deep learning has ushered in a new era for cybersecurity, it’s not without its challenges and ethical considerations.

- Data Quality and Quantity: Deep learning models hunger for data. In cybersecurity, obtaining large, high-quality labeled datasets for training can be a significant hurdle. Additionally, the fast-paced nature of cyber threats demands real-time data, making the challenge even more daunting.

- Interpretability: Deep learning models, particularly deep neural networks, are often considered “black boxes.” Their decision-making processes are complex and not easily interpretable. This opacity can be problematic when trying to understand why a model flagged a certain activity as malicious, hindering incident response and forensic analysis.

- Adversarial Attacks: Cyber adversaries are getting smarter. They can craft attacks specifically designed to bypass deep learning models. Adversarial attacks manipulate input data in subtle ways to deceive the model, making them a serious concern.

- Resource Intensiveness: Training and deploying deep learning models require substantial computational resources. This can be a roadblock for smaller organizations with limited IT infrastructure.
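
The adversarial attacks item above can be illustrated on a deliberately simple stand-in: a linear scoring model instead of a deep network. For a linear model, the gradient of the score with respect to the input is just the weight vector, so nudging each feature against the sign of its weight lowers the score. The weights, the sample, the step size, and the rule "score above 0 means malicious" are all assumptions made for this sketch.

```python
def score(features, weights, bias):
    """Linear detector: a score above 0 is treated as malicious."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def sign(v):
    return (v > 0) - (v < 0)


def evade(features, weights, epsilon):
    """Shift every feature by epsilon against the sign of its weight."""
    return [x - epsilon * sign(w) for x, w in zip(features, weights)]


# Hypothetical detector parameters and a sample it currently flags.
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = -1.0
SAMPLE = [0.8, 0.1, 0.4]
```

Here `score(SAMPLE, WEIGHTS, BIAS)` is positive, but `score(evade(SAMPLE, WEIGHTS, 0.25), WEIGHTS, BIAS)` is negative: a change of only 0.25 per feature flips the verdict. Attacks on deep models follow the same idea with gradients computed through the network.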

Ethical Concerns Surrounding AI in Cybersecurity

- Privacy: AI systems, particularly those using deep learning, can process vast amounts of personal data. The line between legitimate cybersecurity monitoring and privacy invasion can become blurry. Striking the right balance between security and privacy is crucial.

- Bias and Fairness: Deep learning models are susceptible to biases present in training data. If the data used to train these models is biased in terms of race, gender, or other attributes, the resulting cybersecurity system can unintentionally discriminate against certain groups.

- Transparency: As mentioned earlier, deep learning models are often seen as black boxes. This lack of transparency can hinder accountability and make it difficult to comply with regulations that require explanations for decisions made by AI systems.

- Over-Reliance on AI: While AI can enhance cybersecurity, an over-reliance on AI systems without human oversight can lead to complacency. Cybersecurity professionals should always be in the loop to make critical decisions and understand the context.
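
One way to check for the bias described above is to compare error rates across groups. The sketch below computes the false positive rate per group, meaning the share of benign activity that was wrongly flagged. The record format `(group, flagged, actually_malicious)` and the function name are assumptions for illustration; which groups and metrics matter depends on the deployment.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """records: iterable of (group, flagged, actually_malicious) tuples."""
    false_positives = defaultdict(int)
    benign_total = defaultdict(int)
    for group, flagged, malicious in records:
        if not malicious:
            benign_total[group] += 1
            if flagged:
                false_positives[group] += 1
    # Only groups with at least one benign record appear in the result.
    return {group: false_positives[group] / n for group, n in benign_total.items()}
```

A large gap between groups in this output is a signal that the model, or the data it was trained on, deserves a closer audit by a human reviewer.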

Real-World Examples

Despite these challenges and ethical concerns, numerous organizations have embraced AI and deep learning for cybersecurity:

- Darktrace: Darktrace uses unsupervised machine learning and AI to detect and respond to cyber threats in real time. Its “Enterprise Immune System” learns the unique behaviors of a network and can identify deviations indicative of attacks.

- Cylance: Acquired by BlackBerry, Cylance employs AI-driven threat detection to prevent malware and other security threats. Its approach is based on machine learning models trained to recognize both known and unknown threats.

- FireEye: FireEye’s Mandiant Threat Intelligence uses AI and machine learning to detect and respond to cyber threats. It leverages deep learning for rapid threat detection and offers automated response capabilities.

- Google’s Chronicle: Chronicle, which began as an Alphabet company and later became part of Google Cloud, offers a cybersecurity platform that employs machine learning to help organizations analyze and detect threats in their network data.

These examples illustrate the practical application of deep learning in real-world cybersecurity scenarios. Organizations are increasingly relying on AI to bolster their defenses and respond swiftly to emerging threats.

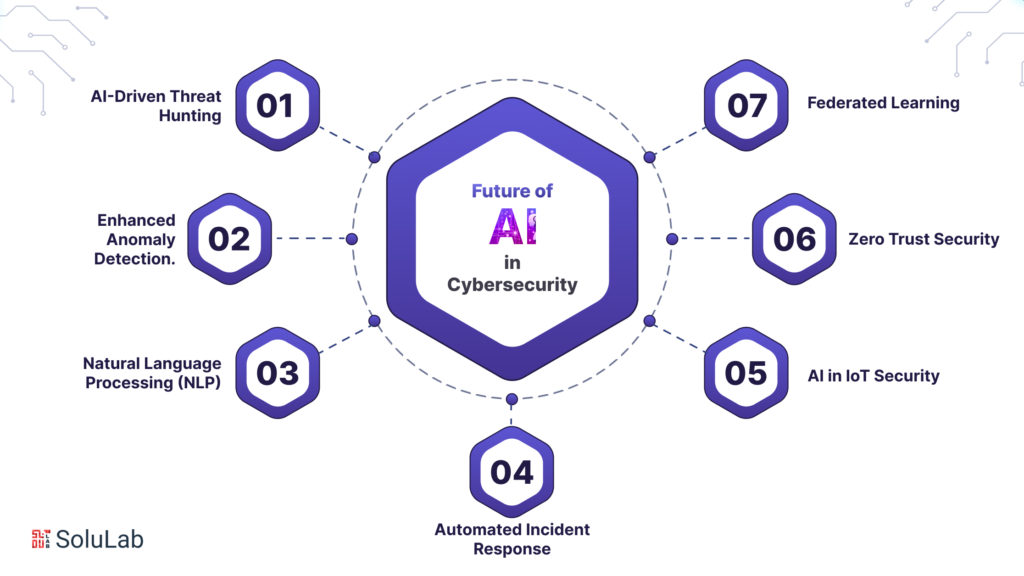

The Future of AI in Cybersecurity

As we look ahead, the future of AI in cybersecurity promises continued evolution and transformation. Several key trends and developments are shaping the landscape:

- AI-Driven Threat Hunting: AI-powered threat-hunting tools are becoming more sophisticated. They can proactively seek out and neutralize threats within a network, reducing the time it takes to detect and respond to cyberattacks.

- Enhanced Anomaly Detection: Deep learning models are continuously improving in their ability to detect subtle anomalies and deviations from normal behavior, making them invaluable for identifying sophisticated threats.

- Natural Language Processing (NLP): NLP techniques are being applied to cybersecurity to analyze text-based threats, such as phishing emails or social engineering attempts. This helps in the early detection and mitigation of such threats.

- Automated Incident Response: AI is being used to automate incident response processes. AI-driven systems can not only detect threats but also take actions to mitigate them in real time, reducing the burden on cybersecurity teams.

- AI in IoT Security: With the proliferation of Internet of Things (IoT) devices, AI is being used to secure these interconnected devices and networks. Machine learning models can detect unusual behavior or vulnerabilities in IoT ecosystems.

- Zero Trust Security: AI plays a pivotal role in implementing the zero-trust security model. It continuously verifies the identity and security posture of devices and users accessing a network, enhancing overall security.

- Federated Learning: This emerging approach allows organizations to collaborate on threat detection without sharing sensitive data. Each participant trains on its own data locally and shares only model updates, which are combined into a shared model, so the underlying data never leaves the organization.
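
The federated learning item above can be sketched with the aggregation step commonly called federated averaging: each organization sends its locally trained weights and its sample count, and the coordinator computes a sample-weighted mean. The function name and the input format are assumptions for this example, and the sketch leaves out local training, secure aggregation, and communication entirely.

```python
def federated_average(client_updates):
    """Combine local models into one.

    client_updates: list of (weights, num_samples) pairs, where every
    weights list has the same length. Returns the sample-weighted mean.
    """
    total_samples = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in client_updates) / total_samples
        for i in range(dim)
    ]
```

For instance, `federated_average([([1.0, 0.0], 100), ([3.0, 2.0], 300)])` returns `[2.5, 1.5]`. Only the weight vectors and counts cross organizational boundaries; the raw logs used for training stay where they were collected.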

The Evolving Role of Human Experts

While AI is a powerful ally in the fight against cyber threats, human expertise remains irreplaceable:

- Contextual Understanding: Human experts bring context to cybersecurity. They can understand the unique nuances of an organization’s environment, making judgment calls that AI may struggle with.

- Adaptation and Innovation: Cyber adversaries continually evolve their tactics. Human cybersecurity professionals can adapt strategies and innovate responses, staying one step ahead.

- Ethical Decision-Making: Ethical considerations in cybersecurity often require human judgment. Decisions regarding privacy, compliance, and the ethical use of AI are guided by human values.

- Complex Investigations: In complex cyber incidents, human investigators are essential. They can piece together the puzzle, combining technical analysis with a broader understanding of the threat landscape.

In essence, the future of AI in cybersecurity is a collaboration between human expertise and artificial intelligence. AI enhances the capabilities of cybersecurity professionals, enabling them to work more efficiently and effectively.

Conclusion

The evolution of AI in cybersecurity, from rule-based systems to deep learning, has been a remarkable journey. It has equipped us with powerful tools to defend against an ever-evolving threat landscape. As AI continues to advance, we must remain vigilant, addressing challenges such as data privacy and bias, while also recognizing the crucial role that human experts play in keeping our digital world secure.

In this era of rapid technological change, the fusion of human intelligence and AI-driven automation will be the key to staying resilient in the face of cyber threats. As we move forward, the synergy between human expertise and AI innovation will be our strongest defense in the dynamic and complex world of cybersecurity.

SoluLab, a forward-thinking technology company, is known for innovative solutions across domains, including cybersecurity. It offers AI development services and AI developer hiring solutions for organizations, and it applies advanced AI and deep learning to build adaptive, proactive defenses against evolving threats.

FAQs

1. What is the primary difference between rule-based systems and deep learning in cybersecurity?

Rule-based systems rely on predefined rules and signatures to detect threats, while deep learning uses neural networks to analyze data and make decisions based on learned patterns. Deep learning is more adaptable to evolving threats.

2. How does AI in cybersecurity address ethical concerns, such as privacy and bias?

AI in cybersecurity must be implemented with strict privacy policies and data protection measures. To address bias, diverse and unbiased training data should be used, and models should be regularly audited for fairness.

3. Can AI completely replace human cybersecurity professionals?

No, AI complements human expertise but cannot replace it entirely. Human professionals bring contextual understanding, ethical decision-making, and adaptability that AI lacks. They play a vital role in complex investigations and decision-making.

4. What are some real-world examples of organizations successfully using AI for cybersecurity?

Organizations like Darktrace, Cylance, FireEye, and Google’s Chronicle have implemented AI-driven cybersecurity solutions. These companies employ AI for threat detection, incident response, and real-time monitoring.

5. What are the key trends shaping the future of AI in cybersecurity?

The future of AI in cybersecurity is marked by trends such as AI-driven threat hunting, enhanced anomaly detection, natural language processing (NLP), automated incident response, AI in IoT security, zero-trust security models, and federated learning for threat intelligence sharing. These trends aim to bolster cyber defenses and adapt to evolving threats.