LLaMA 3, the third iteration of Meta’s large language model, has just been released, bringing a slew of new AI features to the company’s social media platforms. To put that into perspective, the generative AI market is predicted to reach $1.3 trillion by 2032. Against that backdrop, Meta LLaMA 3 is a significant release among large-scale, openly available LLMs.

Beyond its improved capabilities, the model, which was trained on a large corpus of text and code, is also built into Meta’s main social media platforms (Facebook, Instagram, and WhatsApp) as the company’s latest AI assistant, “Meta AI.”

Meta LLaMA 3 is not just another large language model; it’s a powerful tool that combines extensive training data with sophisticated algorithms to deliver unprecedented performance. In this blog, we will walk you through the key features, applications, and benefits of Meta LLaMA 3, offering insights into how it can be leveraged across various industries.

What is Meta LLaMA 3?

LLaMA 3 (Large Language Model Meta AI 3) is the most recent large language model developed by Meta. Extensive training on text data gives it a strong grasp of language.

As a result, it excels at writing, translating between languages, and giving thoughtful answers to questions. The model will be available on several platforms, including Microsoft Azure, Google Cloud, and AWS. The goal of Meta LLaMA 3 is to make sophisticated AI accessible to everyone. With this release, Meta positions itself among the leading providers of AI and ML development tools, pushing the boundaries of what these systems can do.

The model improves on LLaMA 2 and prioritizes innovation, scalability, and simplicity. Enhancements include support for longer sequences of up to 8,192 tokens, a more efficient tokenizer, and grouped query attention (GQA) for faster inference.
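To make grouped query attention more concrete, here is a minimal sketch in plain Python. It shows several query heads sharing one key/value head, which shrinks the key/value cache that has to be kept around during inference. This is a toy illustration rather than Meta’s implementation: the function names and the head counts used in the example are assumptions chosen for readability.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def grouped_query_attention(q, k, v):
    """Causal attention where groups of query heads share one K/V head.

    q has shape [n_q_heads][seq][dim]; k and v have shape [n_kv_heads][seq][dim].
    Returns a nested list of shape [n_q_heads][seq][dim].
    """
    n_q_heads, n_kv_heads = len(q), len(k)
    assert n_q_heads % n_kv_heads == 0, "query heads must divide evenly into groups"
    group_size = n_q_heads // n_kv_heads
    dim = len(q[0][0])
    out = []
    for head in range(n_q_heads):
        kv = head // group_size  # index of the K/V head this query head shares
        head_out = []
        for t, q_t in enumerate(q[head]):
            # Causal masking: position t only attends to positions 0..t.
            scores = [dot(q_t, k[kv][s]) / math.sqrt(dim) for s in range(t + 1)]
            weights = softmax(scores)
            head_out.append([
                sum(w * v[kv][s][d] for s, w in enumerate(weights))
                for d in range(dim)
            ])
        out.append(head_out)
    return out

def kv_cache_entries(n_kv_heads, head_dim, seq_len):
    """Number of cached key and value numbers per layer."""
    return 2 * n_kv_heads * head_dim * seq_len

# Hypothetical head counts, for illustration only: 32 query heads that either
# each keep their own K/V head (standard multi-head attention) or share 8.
full = kv_cache_entries(n_kv_heads=32, head_dim=128, seq_len=8192)
grouped = kv_cache_entries(n_kv_heads=8, head_dim=128, seq_len=8192)
print(f"KV cache shrinks by a factor of {full // grouped}")
```

The saving comes entirely from storing fewer key/value heads; the number of query heads, and therefore the shape of the output, stays the same.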

LLaMA 3 has undergone extensive training on more than 15 trillion tokens of publicly available data. This material covers a wide range of content, including code, historical information, and multiple languages.

Combining this large, varied training dataset with Meta’s improvements in pre-training and fine-tuning has made LLaMA 3 a very effective model. It performs well both in practical scenarios and on a variety of industry benchmarks.

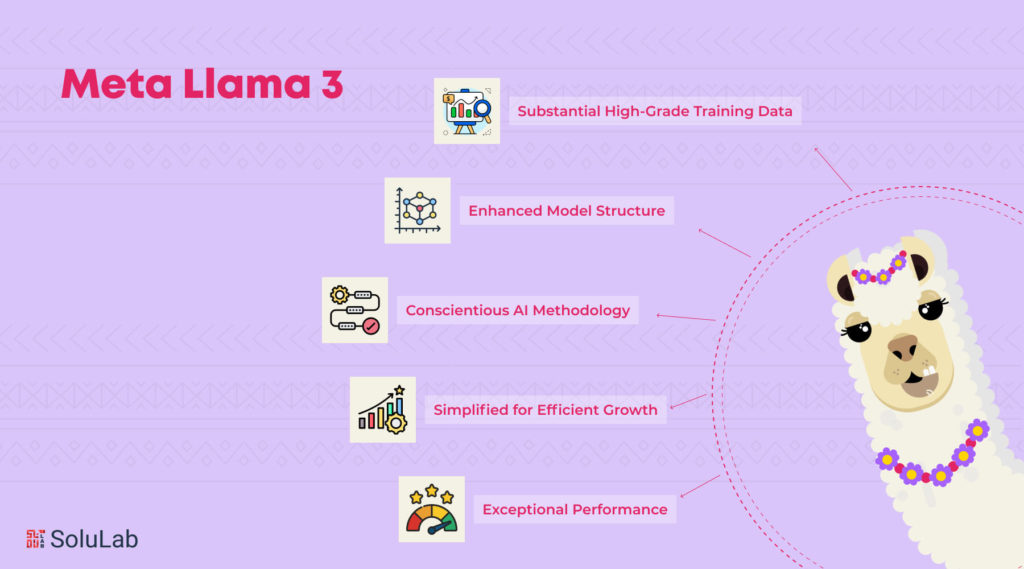

Key Characteristics of the LLaMA Model

LLaMA 3 introduces several features that move large language models forward. Its main capabilities and developments are listed below.

- LLaMA 3 keeps its decoder-only transformer architecture while adding significant enhancements. One of the main improvements is its tokenizer, which now has a vocabulary of 128,000 tokens and encodes language far more efficiently.

- Grouped query attention (GQA) is used in both the 8-billion and 70-billion-parameter models, which makes inference more efficient.

- LLaMA 3 outperforms its predecessors and competing models on several benchmarks, notably MMLU and HumanEval.

- After pretraining, LLaMA 3 goes through an enhanced post-training phase. To improve the model’s quality and decision-making, this step combines supervised fine-tuning, rejection sampling, and policy optimization.

- Major platforms support LLaMA 3, which ships with a more efficient tokenizer and new safety features. Developers can customize their applications and deploy AI responsibly with LLaMA 3.
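The rejection sampling step mentioned in the list above can be sketched as a best-of-N selection: draw several candidate responses, score each one, and keep the best. The article does not describe Meta’s exact procedure, so treat this as a generic illustration. `toy_generate` and `toy_reward` are hypothetical stand-ins for a real language model and a real reward model.

```python
import random

def rejection_sample(prompt, generate, reward, n_candidates=4, seed=0):
    """Draw several candidate responses and keep the highest-scoring one."""
    rng = random.Random(seed)
    candidates = [generate(prompt, rng) for _ in range(n_candidates)]
    scored = [(reward(prompt, c), c) for c in candidates]
    best_score, best = max(scored, key=lambda pair: pair[0])
    return best, best_score

def toy_generate(prompt, rng):
    """Hypothetical generator: picks one canned answer at random."""
    answers = [
        "I cannot answer that.",
        "Paris.",
        "Paris is the capital of France.",
        "It might be Lyon.",
    ]
    return rng.choice(answers)

def toy_reward(prompt, response):
    """Hypothetical reward: prefers correct, complete, non-refusing answers."""
    score = 0.0
    if "Paris" in response:
        score += 1.0
    if "capital" in response:
        score += 0.5
    if "cannot" in response:
        score -= 1.0
    return score

best, score = rejection_sample(
    "What is the capital of France?", toy_generate, toy_reward, n_candidates=8
)
print(best, score)
```

In a training setup, the selected responses would typically be collected as fine-tuning data; here the function simply returns the winner and its score.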

Capabilities of LLaMA 3

Meta built LLaMA 3, its most recent openly available model, to compete with the top proprietary models currently on the market.

According to Meta, it was critical to take developer feedback into account to improve LLaMA 3’s overall usefulness while continuing to emphasize the responsible use and deployment of large language models (LLMs).

Compared with LLaMA 2, LLaMA 3 is stronger at reasoning, code generation, and following instructions. It also outperforms other open models on the ARC, DROP, and MMLU benchmarks. The features behind these gains are described below.

Exceptional Performance

The new 8B and 70B parameter Meta LLaMA 3 models are a major improvement over LLaMA 2 and set a new benchmark for large language models at those scales.

Thanks to improvements in both the pre- and post-training phases, the pre-trained and instruction-fine-tuned models are, according to Meta, the best performers at their respective scales.

Changes to post-training substantially reduced false refusal rates, improved alignment, and increased the diversity of model responses. Significant gains in reasoning, code generation, and instruction following also make LLaMA 3 more versatile and effective. In developing LLaMA 3, the team evaluated model performance on both standard benchmarks and real-world scenarios.

As part of this work, the team developed a new, high-quality human evaluation set containing 1,800 prompts that cover 12 key use cases. These include asking for advice, brainstorming, coding, creative writing, reasoning, and summarization.

To maintain fairness and avoid accidentally overfitting the models to it, even the modeling teams were not given access to this evaluation set. The Meta LLaMA 3 model was developed with a strong emphasis on innovation, scalability, and simplicity. This philosophy shaped every facet of the project, but four components received special attention: the model architecture, the pretraining data, scaling up pretraining, and instruction fine-tuning.

Enhanced Model Structure

In line with its design philosophy, the LLaMA 3 team opted for a relatively standard decoder-only transformer architecture, with several significant improvements over LLaMA 2.

The tokenizer in LLaMA 3 has a vocabulary of 128K tokens, which encodes language more efficiently and, as a result, improves model performance. To improve inference efficiency, grouped query attention (GQA) was adopted for both the 8B and 70B sizes. The models were trained on sequences of 8,192 tokens, with a mask that prevents self-attention from crossing document boundaries.
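The document-boundary mask described above can be illustrated with a short sketch. When several documents are packed into one training sequence, each token should attend only to earlier tokens from the same document. The function names here are hypothetical, and the example uses a five-token sequence rather than the full 8,192 tokens.

```python
def doc_ids_from_lengths(lengths):
    """Assign a document index to every token in a packed sequence."""
    ids = []
    for doc_index, length in enumerate(lengths):
        ids.extend([doc_index] * length)
    return ids

def document_causal_mask(doc_ids):
    """mask[i][j] is True when token i may attend to token j.

    Two conditions must hold: j is not in the future (causal), and both
    tokens belong to the same document (no attention across boundaries).
    """
    n = len(doc_ids)
    return [
        [j <= i and doc_ids[i] == doc_ids[j] for j in range(n)]
        for i in range(n)
    ]

# Two documents of 3 and 2 tokens packed into one sequence of 5 tokens.
doc_ids = doc_ids_from_lengths([3, 2])
for row in document_causal_mask(doc_ids):
    print(" ".join("1" if allowed else "0" for allowed in row))
# Prints:
# 1 0 0 0 0
# 1 1 0 0 0
# 1 1 1 0 0
# 0 0 0 1 0
# 0 0 0 1 1
```

The first token of the second document (row four) sees only itself, exactly as it would if that document had been placed in a sequence of its own.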

Substantial High-Grade Training Data

A large, carefully curated training dataset is essential to building the best language model. In keeping with its design principles, the LLaMA 3 team invested heavily in pretraining data.

LLaMA 3 was pretrained on a dataset of more than 15 trillion tokens, all collected from publicly available sources. This dataset is seven times larger than the one used for LLaMA 2 and contains four times more code.

More than 5% of the pretraining dataset consists of high-quality non-English data covering more than 30 languages, in preparation for future multilingual use cases. However, performance in these languages is not expected to match performance in English.

To ensure LLaMA 3 was trained on high-quality data, the team developed a series of data-filtering pipelines. These pipelines use heuristic filters, NSFW filters, semantic deduplication, and text classifiers to predict data quality.

Interestingly, earlier iterations of LLaMA proved good at identifying high-quality data. LLaMA 2 was therefore used to generate the training data for the text-quality classifiers behind LLaMA 3.
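A filtering pipeline of this kind can be sketched in a few lines. The version below is a deliberately simplified illustration and not Meta’s pipeline. A word blocklist stands in for an NSFW filter, word-set Jaccard similarity stands in for semantic deduplication (which would normally compare embeddings), and a unique-word ratio stands in for a learned quality classifier. All function names and thresholds are assumptions.

```python
import re

def words(text):
    return re.findall(r"[a-z0-9']+", text.lower())

def passes_heuristics(text, min_words=5, max_symbol_ratio=0.3):
    """Heuristic filter: drop very short or symbol-heavy documents."""
    if len(words(text)) < min_words:
        return False
    symbols = sum(1 for ch in text if not (ch.isalnum() or ch.isspace()))
    return symbols / max(len(text), 1) <= max_symbol_ratio

def passes_blocklist(text, blocklist):
    """Stand-in for an NSFW filter: reject documents with blocked words."""
    return not (set(words(text)) & set(blocklist))

def quality_score(text):
    """Stand-in for a learned quality classifier: unique-word ratio."""
    toks = words(text)
    return len(set(toks)) / len(toks) if toks else 0.0

def similarity(a, b):
    """Stand-in for semantic similarity: Jaccard overlap of word sets."""
    sa, sb = set(words(a)), set(words(b))
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)

def filter_corpus(docs, blocklist=(), dedup_threshold=0.8, min_quality=0.5):
    """Apply each filter in turn and keep the documents that survive."""
    kept = []
    for doc in docs:
        if not passes_heuristics(doc):
            continue
        if not passes_blocklist(doc, blocklist):
            continue
        if quality_score(doc) < min_quality:
            continue
        if any(similarity(doc, other) >= dedup_threshold for other in kept):
            continue  # near-duplicate of a document we already kept
        kept.append(doc)
    return kept

corpus = [
    "The quick brown fox jumps over the lazy dog.",
    "The quick brown fox jumps over the lazy dog!",   # near-duplicate
    "$$$ !!! ###",                                    # no real words
    "buy buy buy buy buy buy now",                    # repetitive, low quality
    "Grouped query attention shares key and value heads.",
]
for doc in filter_corpus(corpus):
    print(doc)
```

Running the filters in order from cheapest to most expensive is a common design choice, since the inexpensive heuristics discard much of the noise before any costlier comparison runs.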

Conscientious AI Methodology

Meta has adopted a holistic approach to responsible AI, giving developers control over how Meta LLaMA 3 models are used.

To build safe and resilient models, this includes rigorous red-teaming, adversarial testing, and iterative instruction fine-tuning.

New tools that support responsible deployment include CyberSecEval 2, which evaluates code security; LLaMA Guard 2, which uses the MLCommons taxonomy; and Code Shield, which filters insecure code generated by the model at inference time.

Simplified for Efficient Growth

In addition to improving the models directly, a great deal of work went into making LLaMA 3 as efficient as possible for widespread use.

Compared to LLaMA 2, the redesigned tokenizer yields up to 15% fewer tokens for the same text. Together with GQA, this keeps the inference efficiency of the 8B model on par with the previous 7B model, despite the extra parameters.
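As a rough worked example of what a 15% token reduction means in practice, the sketch below computes how much more text fits into the same context window. It assumes the best case of a full 15% reduction (the article says "up to"), and the function names are hypothetical.

```python
def tokens_after_improvement(old_tokens, reduction=0.15):
    """Tokens needed for the same text after a fractional reduction."""
    return round(old_tokens * (1 - reduction))

def effective_context_gain(reduction=0.15):
    """Factor by which the text capacity of a fixed window grows: 1 / (1 - r)."""
    return 1 / (1 - reduction)

# Text that needed 1,000 tokens with the old tokenizer (best case).
print(tokens_after_improvement(1000))

# An 8,192-token window now holds text that previously needed about this many tokens.
print(int(8192 * effective_context_gain()))
```

Under this best-case assumption, the same window holds roughly 17 to 18 percent more text, which is slightly more than the headline 15% because the gain is measured against the smaller token count.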

All of the main cloud providers, model hosts, and other platforms will support LLaMA 3 models. There is also a large amount of open-source code accessible for activities like deployment, testing, and fine-tuning.

Detailed Comparison: LLaMA Meta vs ChatGPT

As artificial intelligence continues to advance, two prominent large language models, LLaMA by Meta and ChatGPT by OpenAI, have emerged as significant contributors to the field. While both models leverage sophisticated algorithms and extensive datasets, their design philosophies, capabilities, and ideal use cases differ. This comparison delves into the key differences between LLaMA and ChatGPT.

1. Development Background

- LLaMA: Developed by Meta (formerly Facebook), LLaMA is designed to be a versatile and adaptable AI model that can cater to a wide array of applications beyond mere conversational interactions. Meta aims to push the boundaries of what large language models can achieve across various domains.

- ChatGPT: Created by OpenAI, ChatGPT is specifically designed to excel in conversational AI, providing coherent and contextually relevant responses in dialogue-based interactions. OpenAI focuses on creating a model that enhances human-computer interaction through natural language conversations.

2. Design Philosophy

- LLaMA: LLaMA emphasizes flexibility and adaptability. It is built to handle a variety of tasks, from simple text generation to complex data analysis and problem-solving across different domains. Meta’s goal is to create a model that can integrate seamlessly into a wide range of applications.

- ChatGPT: ChatGPT is optimized for generating conversational text, focusing on maintaining the flow of dialogue and providing natural, engaging responses. Its design prioritizes ease of use in interactive applications like customer service and virtual assistants, ensuring high performance in conversational settings.

3. Training Methodology

- LLaMA: Trained on a diverse set of data sources, LLaMA’s training emphasizes broad applicability. It incorporates extensive context understanding to perform well across different types of tasks. Meta trains LLaMA on publicly available data, including web text, code, and material in multiple languages, to give it comprehensive functionality.

- ChatGPT: ChatGPT is also trained on a diverse dataset, but its training focuses particularly on dialogue data to ensure high performance in conversational settings. OpenAI fine-tunes ChatGPT on large-scale datasets that include internet text and conversational interactions to enhance its ability to understand and generate human-like dialogue.

4. Performance and Capabilities

- LLaMA: Known for its robust performance in generating detailed, context-rich information. LLaMA excels in applications requiring comprehensive understanding and analysis, making it suitable for tasks in research, healthcare, and financial services. Its ability to generate insightful and accurate content across various fields sets it apart.

- ChatGPT: Excels in generating natural and engaging conversational text. It is highly effective in customer support, virtual assistance, and any scenario requiring real-time, interactive dialogue. ChatGPT’s strength lies in its ability to maintain conversational context and provide relevant responses, making it ideal for interactive applications.

5. Customization and Scalability

- LLaMA: Designed with a high degree of customization in mind, LLaMA can be fine-tuned for specific industry needs. Its architecture supports scalability, allowing it to handle large-scale data and complex tasks effectively. Meta provides tools and frameworks to adapt LLaMA to various unique requirements.

- ChatGPT: While also customizable, ChatGPT is generally deployed in more standardized formats. It is easily scalable for widespread use in applications like chatbots and customer service where consistent conversational abilities are needed. OpenAI offers API access to integrate ChatGPT seamlessly into various platforms.

6. Integration and Deployment

- LLaMA: Often requires more specialized integration due to its broader range of capabilities. Its deployment may involve more complex configurations to adapt to specific industry requirements. Meta provides support for integrating LLaMA into diverse systems and workflows.

- ChatGPT: Easier to integrate into existing systems that require conversational AI. Its deployment is typically more straightforward, making it a go-to choice for businesses looking to implement AI-driven customer interaction solutions quickly. OpenAI’s API allows for easy integration into various applications with minimal configuration.

Both LLaMA and ChatGPT represent significant advancements in AI, each excelling in their respective areas. LLaMA offers versatility and depth, making it ideal for industries that need comprehensive data analysis and problem-solving capabilities. ChatGPT, with its conversational prowess, is perfect for applications requiring interactive and engaging user experiences. The choice between the two depends largely on the specific needs and goals of the application in question.

LLaMA 3 vs Gemini: Key Differences

LLaMA (Large Language Model Meta AI), developed by Meta, and Gemini, a language model by Google DeepMind, represent significant advancements in artificial intelligence, each with unique strengths and applications. LLaMA is designed with versatility and adaptability in mind, catering to a broad range of tasks from natural language processing to complex data analysis. Its architecture emphasizes flexibility, allowing it to be fine-tuned for various industry-specific applications such as healthcare, finance, and research. This adaptability makes LLaMA a powerful tool for generating detailed, context-rich information and performing comprehensive analyses.

On the other hand, Gemini by Google DeepMind focuses on combining powerful language understanding with advanced reasoning capabilities. Gemini is designed to excel in tasks that require deep comprehension and contextual awareness, such as intricate problem-solving and generating insightful responses. It leverages Google DeepMind’s extensive experience in AI and machine learning to integrate advanced reasoning and language understanding, making it highly effective in applications requiring critical thinking and detailed analysis.

In summary, while both LLaMA and Gemini are at the forefront of AI development, their core focuses differ. LLaMA emphasizes broad adaptability and versatility across various domains, making it ideal for a wide range of applications. Gemini, with its enhanced reasoning and deep comprehension, is particularly suited for tasks that require advanced problem-solving and contextual understanding. The choice between LLaMA and Gemini will depend on the specific needs of the application, whether it be broad adaptability or advanced reasoning capabilities.

Future Advancements for LLaMA 3

The release of the LLaMA 3 8B and 70B models is only the beginning, with many more enhancements planned.

The team is still training its largest models, which exceed 400 billion parameters, and is excited about how they are trending.

Over the coming months, Meta intends to release several models with new features, including multimodality, multilingual conversation, longer context windows, and stronger overall capabilities.

Once training is complete, Meta also plans to publish a detailed research paper describing how LLaMA 3 was trained.

While the largest models are still training, Meta has shared a few snapshots that give a glimpse of how they are trending.

It is important to note that this data comes from an early checkpoint of LLaMA 3 and does not represent the capabilities of the models released today. Meta says it is committed to the continued growth of an open AI ecosystem and to releasing its models responsibly. The company believes that openness leads to better, safer products, faster innovation, and a healthier market overall, which benefits both Meta and society at large.

Meta is taking a community-first approach with LLaMA 3. The models are now available on several popular cloud, hosting, and hardware platforms, with more to come.

Conclusion

LLaMA (Large Language Model Meta AI) represents a significant advancement in the field of artificial intelligence, offering unparalleled versatility and adaptability across a wide range of applications. From enhancing natural language processing and data analysis to driving innovations in healthcare, finance, and research, LLaMA’s capabilities make it a powerful tool for various industries. Its ability to generate detailed, context-rich information and perform comprehensive analyses sets it apart as a leading AI model. By understanding the core features and potential applications of LLaMA, businesses and researchers can leverage this technology to achieve remarkable results and drive forward their respective fields.

However, implementing LLaMA also presents several challenges, including data privacy concerns, the need for high-quality training data, and the complexity of integrating AI into existing systems. These hurdles can be daunting for organizations looking to adopt advanced AI solutions. This is where SoluLab, a leading AI development company, can provide invaluable support. With expertise in AI integration, data management, and customized solutions, SoluLab can help businesses navigate these challenges effectively. By partnering with SoluLab, organizations can utilize the full potential of LLaMA while ensuring compliance, security, and seamless integration. Contact SoluLab today to explore how our AI development services can empower your business with innovative technology.

FAQs

1: What is LLaMA and who developed it?

LLaMA (Large Language Model Meta AI) is an innovative artificial intelligence model developed by Meta (formerly Facebook). It is designed to handle a wide range of tasks, from natural language processing and text generation to complex data analysis, offering versatility and adaptability across various industries.

2: How does LLaMA differ from other AI language models?

LLaMA stands out due to its broad applicability and adaptability. Unlike other models that may focus primarily on conversational abilities or specific tasks, LLaMA is designed to excel in diverse applications, including research, healthcare, and finance. Its ability to generate detailed, context-rich information and perform comprehensive analyses makes it a versatile tool for various complex tasks.

3: What are the main applications of LLaMA?

LLaMA can be applied across numerous industries and use cases. In healthcare, it can assist in patient data analysis and medical research. In finance, it can aid in market analysis and predictions. Additionally, it is valuable for academic research, customer service automation, content creation, and any other domain requiring advanced natural language processing and data analysis capabilities.

4: What challenges might businesses face when implementing LLaMA?

Implementing LLaMA can present several challenges, including ensuring data privacy, obtaining high-quality training data, and integrating the AI model into existing systems. Additionally, maintaining compliance with regulatory requirements and securing the AI infrastructure are critical concerns that businesses need to address.

5: How can SoluLab help businesses overcome these challenges?

SoluLab, a leading AI development company, can help businesses navigate the challenges of implementing LLaMA. With expertise in AI integration, data management, and customized solutions, SoluLab ensures seamless integration of LLaMA into existing systems while maintaining data privacy and regulatory compliance. By partnering with SoluLab, businesses can effectively harness the power of LLaMA to drive innovation and achieve their goals. Contact SoluLab today to learn more about our AI development services.