LLMOps combines large language models (LLMs) with MLOps practices to address the unique problems posed by massive language models. These models can generate text, translate it, and answer queries, and they call for a distinct set of techniques and resources to be applied successfully in real-world scenarios.

It is also important to realize that LLMOps usually works behind the scenes. Whenever you engage with a model from Google or OpenAI, whether through an app or a browser, you are consuming that model as a service. Increasingly, however, the focus of LLMOps has shifted toward serving models for particular use cases without depending on any external supplier.

This article covers the basic concepts of LLMOps: what it is, what its main components are, how it benefits businesses, and how it is changing traditional practices.

What is LLMOps?

Large Language Model Operations, also known as LLMOps, is the field dedicated to overseeing the operational facets of LLMs. LLMs are artificial intelligence (AI) systems trained on large datasets that include text and code. Their applications range from text generation and language translation to content creation and other creative work.

In LLMOps, the key concerns are successful deployment, ongoing observation, and efficient maintenance of LLMs across production systems. Addressing these concerns requires well-defined protocols and methods that ensure these powerful language models function as intended and yield accurate outcomes in real-world applications.

The large language model market is expected to grow rapidly, at a compound annual growth rate (CAGR) of 33.2%, taking the industry from USD 6.4 billion in 2024 to USD 36.1 billion in 2030.

The Rise of LLMOps

In 2018, early LLMs such as GPT were released. They gained widespread popularity only more recently, mainly because of the notable improvements in the capabilities of later models, starting with GPT-3. These capabilities have led to the emergence of numerous applications built on LLMs, including customer support chatbots, language translation services, coding assistants, and writing tools.

The term LLMOps (large language model operations) emerged from the development of new tools and best practices for managing the LLM lifecycle, in response to the difficulties of building and running such applications. These technologies have made it possible to create apps that generate and understand text at a level comparable to that of a human. Besides affecting customer service, content development, and data analysis, this fundamental change has also created a need for prompt engineering to fully utilize LLMs.

Why Does LLMOps Matter?

When LLMs are used as a service, LLMOps is crucial for managing these intricate models effectively. Here are the reasons that make LLMOps important:

- LLMs have a large number of parameters and handle a large volume of data. LLMOps ensures that the storage and bandwidth of the infrastructure can accommodate these models.

- For users, getting a precise response in the shortest amount of time is essential. To preserve the flow of human-like interactions, the LLMOps pipeline ensures that responses are delivered within an acceptable amount of time.

- Under LLMOps, continuous monitoring goes beyond simply monitoring infrastructure faults or operational details. It also requires close monitoring of the models’ behavior to better understand their decision-making processes and enhance them in subsequent rounds.

- Because LLMs require a lot of resources, running one can be quite expensive. LLMOps introduces cost-effective techniques to ensure that these resources are used efficiently without jeopardizing performance.
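The latency and cost points above can be made concrete with a small tracking wrapper. The following is only a sketch under stated assumptions: `fake_model` stands in for a real LLM call, the price per 1,000 tokens is a made-up figure, and whitespace splitting is a crude substitute for a real tokenizer.

```python
import time
from dataclasses import dataclass, field
from statistics import quantiles

# Hypothetical price, chosen only for illustration.
PRICE_PER_1K_TOKENS_USD = 0.002


@dataclass
class RequestLog:
    latencies_s: list = field(default_factory=list)
    total_tokens: int = 0

    def record(self, latency_s, tokens):
        self.latencies_s.append(latency_s)
        self.total_tokens += tokens

    def p95_latency(self):
        # quantiles() needs at least two data points.
        if len(self.latencies_s) < 2:
            return self.latencies_s[0] if self.latencies_s else 0.0
        return quantiles(self.latencies_s, n=20, method="inclusive")[-1]

    def estimated_cost_usd(self):
        return self.total_tokens / 1000 * PRICE_PER_1K_TOKENS_USD


def timed_call(model_fn, prompt, log):
    """Call model_fn(prompt) and record its latency and a rough token count."""
    start = time.perf_counter()
    reply = model_fn(prompt)
    elapsed = time.perf_counter() - start
    # Whitespace splitting is a crude stand-in for a real tokenizer.
    tokens = len(prompt.split()) + len(reply.split())
    log.record(elapsed, tokens)
    return reply


def fake_model(prompt):
    # Placeholder for a real LLM call.
    return "echo: " + prompt


log = RequestLog()
for p in ["hello there", "what is LLMOps", "summarize this text please"]:
    timed_call(fake_model, p, log)

print(log.total_tokens, log.estimated_cost_usd(), log.p95_latency())
```

Tracking a high percentile rather than the average is a common choice, because a handful of slow responses is what users tend to notice.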

LLMOps vs. MLOps

While LLMOps and MLOps are quite similar, they differ in how AI products are built with LLMs as opposed to traditional ML. The table below lists the main aspects in which they differ.

| Aspect | LLMOps | MLOps |
| --- | --- | --- |
| Data management | Data is crucial for an effective large language model. Fine-tuning requires a similar amount of data as MLOps. | Data preparation is the most important stage in MLOps for ensuring the ML model’s quality and performance. |
| Cost | The main cost component is model inference in production, which requires deploying expensive GPU-based compute instances. | Costs include data collection and preparation, feature engineering, hyperparameter tuning, and computational resources. |
| Evaluation | Human evaluation relies on experts or crowdsourced workers to judge the output or effectiveness of an LLM in a particular situation. Intrinsic metrics such as ROUGE, BLEU, and BERTScore are also used. | Depending on the type of problem, model performance is assessed with metrics such as precision, accuracy, or mean squared error on a hold-out validation set. |
| Latency | Because of the enormous size and complexity of LLMs and the substantial computation needed to generate and understand text, latency issues are far more common in LLMOps. | Factors including computational complexity, model size, hardware restrictions, data processing, and network latency can cause latency issues. |
| Experimentation | LLMs can learn from raw data easily, but the goal is to use domain-specific datasets to enhance the model’s performance on certain tasks. | Developing a well-performing configuration entails running many experiments and comparing their outcomes. |
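To give a feel for the overlap-based metrics named in the Evaluation row, here is a deliberately simplified unigram-overlap score in the spirit of ROUGE-1. It is a sketch, not the official ROUGE implementation: it skips stemming and proper tokenization, and the function name `rouge1_f1` is chosen here for illustration.

```python
from collections import Counter


def rouge1_f1(reference: str, candidate: str) -> float:
    """Unigram-overlap F1 between a reference text and a model output."""
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(candidate.lower().split())
    # Counter intersection keeps the minimum count of each shared word.
    overlap = sum((ref_counts & cand_counts).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)


score = rouge1_f1("the cat sat on the mat", "the cat lay on the mat")
print(round(score, 3))
```

Scores like this are cheap to compute at scale, which is why they are often paired with the slower human evaluation rather than replacing it.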

How Does LLMOps Promote Monitoring?

Maintaining LLM correctness, relevance, and conformity with changing requirements requires ongoing monitoring and improvement after deployment. LLMOps supports this monitoring in the following ways, which also apply when you build a private LLM:

1. Performance Monitoring

Keep an eye on the model’s performance in real-world settings by watching important metrics and noticing any gradual decline.

2. Model Drift Detection

Maintain a continuous watch for changes in the external context or in trends in the input data that could reduce the efficacy of the model.

3. User Input

Compile and evaluate user input to pinpoint areas in need of improvement and learn more about actual performance. This is the key to understanding user behavior.
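As one hedged illustration of drift detection, the sketch below compares the average prompt length of recent traffic with a baseline window. Prompt length is only a simple proxy for input drift, and the threshold `DRIFT_THRESHOLD` is a hypothetical value; production systems typically track richer signals.

```python
from statistics import mean, stdev

# Hypothetical alert threshold, measured in baseline standard deviations.
DRIFT_THRESHOLD = 3.0


def length_drift_score(baseline_prompts, recent_prompts):
    """Return how many baseline standard deviations the recent mean
    prompt length has moved away from the baseline mean.

    The baseline needs at least two prompts so that stdev() is defined.
    """
    base_lengths = [len(p.split()) for p in baseline_prompts]
    recent_lengths = [len(p.split()) for p in recent_prompts]
    spread = stdev(base_lengths)
    shift = abs(mean(recent_lengths) - mean(base_lengths))
    if spread == 0:
        return 0.0 if shift == 0 else float("inf")
    return shift / spread


baseline = [
    "reset my password",
    "where is my order",
    "cancel my subscription please",
    "update billing address",
]
recent = [
    "please write a detailed legal summary of this contract for me",
    "translate the following paragraph into formal German and explain each choice",
]

drift = length_drift_score(baseline, recent)
print("drift detected" if drift > DRIFT_THRESHOLD else "no drift")
```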

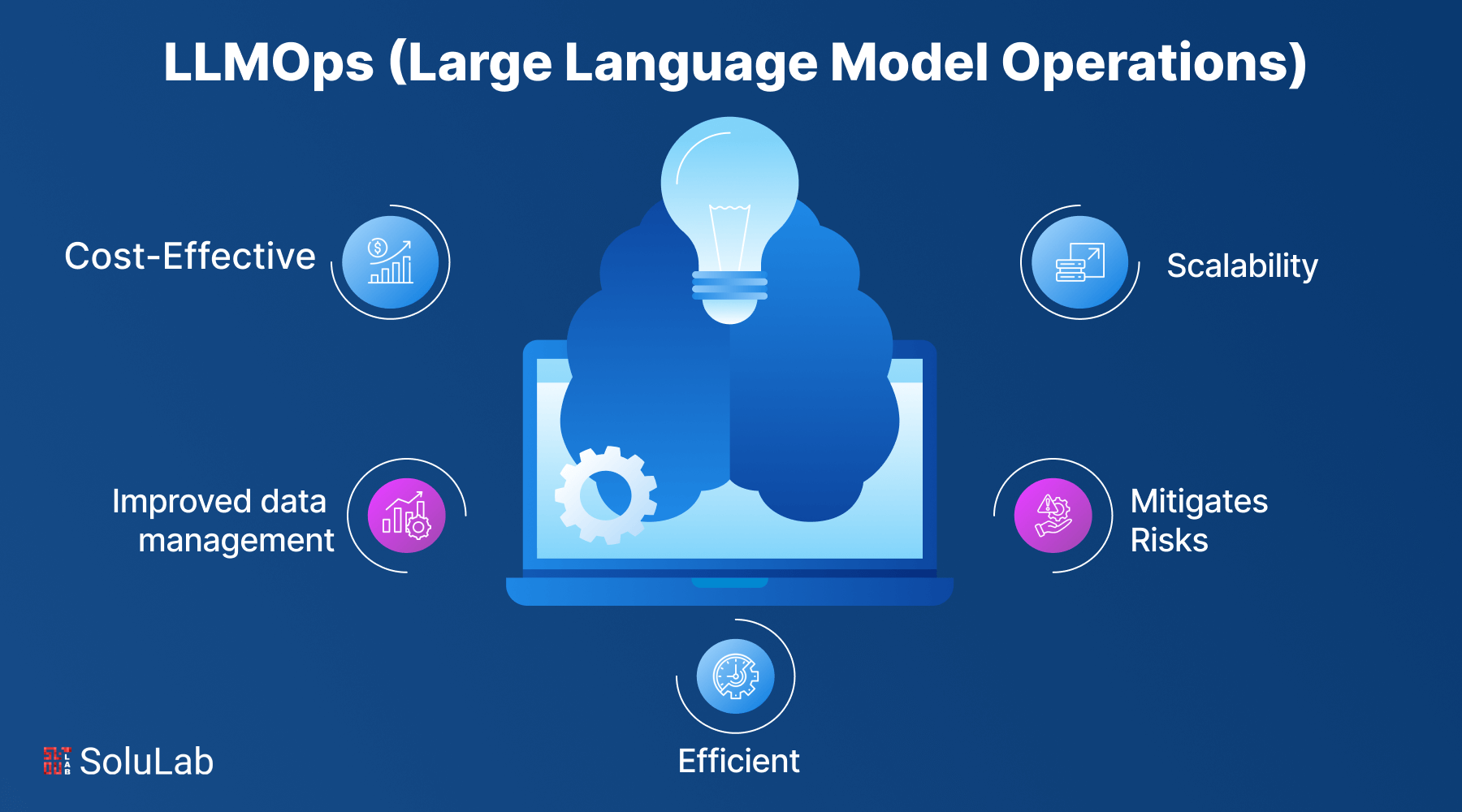

Top 5 Reasons Why You Should Choose LLMOps

LLMOps covers the experimentation, iteration, deployment, and continuous improvement stages of the LLM development lifecycle and provides users with the following benefits:

- Cost-Effective: Using optimization techniques such as model pruning, quantization, and the selection of an appropriate LLMOps architecture, LLMOps reduces needless computing expenditures.

- Improved Data Management: Robust data management procedures ensure high-quality, carefully sourced, and clean datasets for successful LLM training. They also help when many models are supervised and monitored at once, making scaling and management easier.

- Efficiency: With an LLMOps architecture, data teams can design models and pipelines more quickly, produce higher-quality models, and reach production sooner. LLMOps streamlines the whole cycle of an LLM, from data preparation to model training.

- Mitigation of Risk: Organizations can reduce the risks involved in implementing and running LLMs by using LLMOps. By putting strong monitoring systems in place, creating disaster recovery plans, and carrying out frequent security audits, LLMOps lowers the risk of disruptions, data breaches, and outages.

- Ability to Scale: LLMOps offers a scalable and adaptable architecture for administering LLMs, allowing businesses to adjust quickly to shifting needs and specifications. Several models can be controlled and tracked for continuous integration and continuous deployment.
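Quantization, mentioned above as a cost-saving technique, can be illustrated with a toy example. This is a minimal sketch of symmetric 8-bit quantization on a plain list of floats; real LLM quantization operates on tensors with per-channel or per-group scales, and the function names here are illustrative only.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.0, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Each restored value differs from the original by at most half a step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, max_error <= scale / 2 + 1e-9)
```

Storing one small integer per weight plus a single scale is what cuts memory use; the trade-off is the rounding error checked at the end.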

Use Cases of LLMOps

LLMOps offers useful applications across a variety of use cases and industries. Businesses are using this strategy to improve product development, boost customer service, target marketing campaigns, and extract insights from data. Here are the best practices for LLMOps:

- Continuous Integration and Delivery (CI/CD): The purpose of CI/CD is to automate, accelerate, and simplify model building. It reduces the reliance on human intervention to accept new code, which reduces downtime and speeds up code delivery.

- Data Collection, Labeling, and Storage: Data collection draws on a variety of sources to obtain accurate information. Data storage is the gathering and retention of digital data on networked systems, while data labeling is the process of classifying data.

- Inference, Monitoring, and Model Fine-tuning: Model fine-tuning maximizes a model’s ability to carry out domain-specific tasks. Model inference produces outputs from the knowledge the model already holds and can drive production activities based on them. Model monitoring, which incorporates user feedback, gathers and stores data about model behavior.
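One way a CI/CD pipeline can reduce manual review is an automated evaluation gate that runs before a new model or prompt version is promoted. The sketch below assumes each version has already been scored on a fixed evaluation set; the function name `should_promote` and the tolerance `max_drop` are hypothetical.

```python
def should_promote(baseline_scores, candidate_scores, max_drop=0.02):
    """Return True if the candidate's mean score is no more than
    max_drop below the baseline's mean score."""
    baseline_mean = sum(baseline_scores) / len(baseline_scores)
    candidate_mean = sum(candidate_scores) / len(candidate_scores)
    return candidate_mean >= baseline_mean - max_drop


current_model = [0.80, 0.82, 0.78]
similar_model = [0.81, 0.80, 0.79]
weaker_model = [0.70, 0.72, 0.71]

print(should_promote(current_model, similar_model))  # within tolerance
print(should_promote(current_model, weaker_model))   # regression
```

In a pipeline, a `False` result would fail the build so that the regression is caught before deployment.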

Major Components of LLMOps

As in MLOps, the scope of LLMOps in a machine learning project can vary extensively. It can be as narrow or as wide-ranging as the project requires. Some projects cover everything from data preparation to pipeline production, while others implement only the model deployment procedure. Most organizations apply LLMOps principles in the following areas:

- Exploratory data analysis (EDA)

- Data preparation and prompt engineering

- Model fine-tuning

- Model review and governance

- Model inference and serving

- Model monitoring with human feedback

What Does the LLMOps Platform Mean?

An LLMOps platform gives data scientists and software engineers a collaborative environment that supports iterative data exploration, real-time collaboration on experiment tracking, prompt engineering, and model and pipeline management, as well as controlled model transitioning, deployment, and monitoring for LLMs.

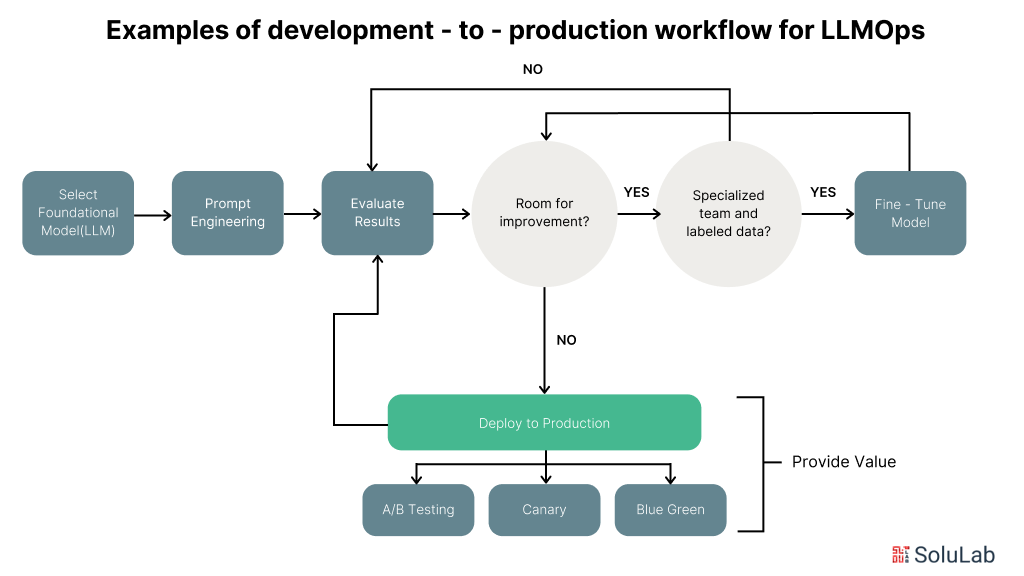

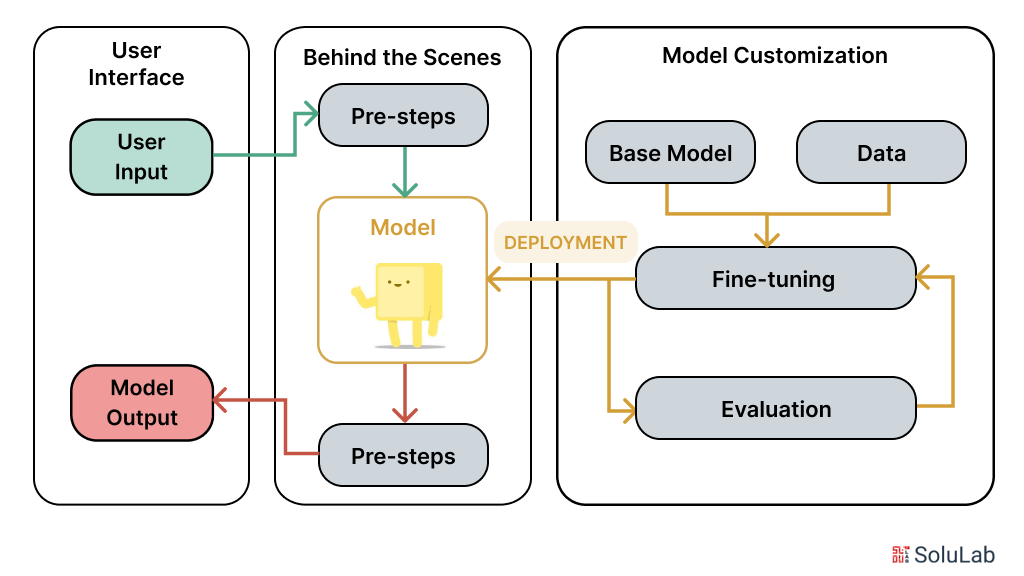

Steps Involved in LLMOps

The processes for MLOps and LLMOps are similar. However, instead of training foundation models from scratch, teams further fine-tune pre-trained LLMs for downstream tasks. Compared with traditional ML models, foundation models change the process of developing an LLM-based application. Some of the important parts of the LLMOps process include the following:

1. Selection of Foundational Models

Foundation models, that is, LLMs pre-trained on enormous datasets, can be used for most downstream tasks. Only a few teams are in a position to train one from the ground up, because building a foundation model is hard, expensive, and time-consuming. For example, Lambda Labs estimated that training OpenAI’s GPT-3, with its 175 billion parameters, would take 355 years and cost $4.6 million on a single Tesla V100 cloud instance. Teams can therefore choose between open-source and proprietary foundation models according to their preferences on matters such as cost, ease of use, performance, and flexibility.
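The Lambda Labs figures can be unpacked with some quick arithmetic. The sketch below reads the 355 years as time on a single GPU and assumes a 365-day year and perfect scaling across GPUs; both are simplifications made here for illustration, not part of the original estimate.

```python
gpu_years = 355
total_cost_usd = 4_600_000
hours_per_year = 365 * 24  # assumption: a 365-day year

# Total single-GPU hours implied by the estimate.
gpu_hours = gpu_years * hours_per_year

# Hourly price implied by dividing the total cost by those hours.
implied_rate_usd = total_cost_usd / gpu_hours

# GPUs needed to finish in a 30-day month, assuming perfect scaling.
gpus_for_one_month = gpu_hours / (30 * 24)

print(gpu_hours, round(implied_rate_usd, 2), round(gpus_for_one_month))
```

Even under these generous assumptions, finishing in a month would take thousands of GPUs, which is why most teams start from an existing foundation model.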

2. Downstream Task Adaptability

Once you have selected a foundation model, you can start using it through the LLM API. However, LLM APIs can be misleading, because they do not always make clear which input leads to which output. For every text prompt, the API attempts to match the pattern and returns a completion of the given text. How, then, do you get the desired output from a given LLM? Both model accuracy and hallucinations are important considerations. Without good data, LLMs can hallucinate, and it can take a few attempts to get the LLM API output into the form you need.

Teams can customize foundation models for downstream tasks, and so address these problems, through prompt engineering, fine-tuning of existing pre-trained models, supplying external data as context, embeddings, and model metrics.
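Two of these techniques, embeddings and supplying external data as context, are often combined: the most relevant document is retrieved and placed in the prompt. The sketch below uses a toy bag-of-words vector in place of a learned embedding model, and the documents, prompt wording, and function names are all invented for illustration.

```python
import math
from collections import Counter


def embed(text):
    # Toy bag-of-words "embedding"; a real system would use a learned model.
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_prompt(question, documents):
    """Pick the document most similar to the question and build a prompt."""
    q_vec = embed(question)
    best = max(documents, key=lambda d: cosine(q_vec, embed(d)))
    return (
        "Answer using only the context below.\n"
        f"Context: {best}\n"
        f"Question: {question}\n"
        "Answer:"
    )


docs = [
    "Refunds are processed within five business days.",
    "Our office is closed on public holidays.",
]
prompt = build_prompt("How long do refunds take?", docs)
print(prompt)
```

Grounding the prompt in retrieved text is one common way to reduce hallucinations, since the model is asked to answer from supplied context rather than from memory alone.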

3. Model Deployment and Monitoring

Model behavior can vary from one version to the next, so programs that rely on LLM APIs should take care not to miss changes in the underlying API model. For that reason, LLM monitoring tools such as WhyLabs and Humanloop exist.
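A lightweight way to notice such changes is to keep a small set of fixed prompts and compare the current outputs with stored fingerprints. This is a sketch only: exact matching is meaningful only when decoding is deterministic, `model_v1` and `model_v2` are stand-ins for real API versions, and it is not how the tools named above work internally.

```python
import hashlib


def fingerprint(text):
    """Hash a response after normalizing case and whitespace."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def changed_prompts(golden, model_fn):
    """Return prompts whose current output no longer matches its stored fingerprint."""
    return [p for p, fp in golden.items() if fingerprint(model_fn(p)) != fp]


def model_v1(prompt):
    # Stand-in for the model version the application was built against.
    return {"capital of France?": "Paris", "2 + 2?": "4"}[prompt]


def model_v2(prompt):
    # Stand-in for an updated version with one changed answer format.
    return {"capital of France?": "The capital is Paris.", "2 + 2?": "4"}[prompt]


golden = {p: fingerprint(model_v1(p)) for p in ["capital of France?", "2 + 2?"]}

print(changed_prompts(golden, model_v1))
print(changed_prompts(golden, model_v2))
```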

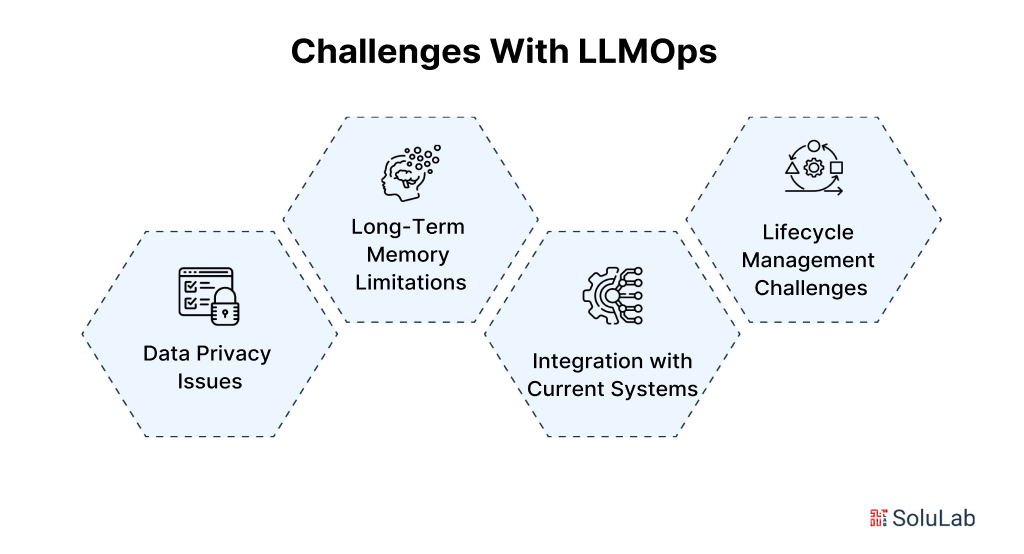

Challenges That Come With LLMOps

Large language model operations, or LLMOps, are by definition complex and rapidly evolving. Because the field is new to many organizations, they can expect to run into difficulties and may struggle to find solutions. Here are some challenges that you may face while implementing LLMOps:

1. Data Privacy Issues

LLMs require large volumes of data, some of which may be highly sensitive. This raises data security and privacy concerns for both individuals and corporations. Although laws and technological solutions keep evolving to address these concerns, privacy may still be a problem for many.
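One common mitigation is to redact obvious personal data before prompts are logged or sent to a third-party model. The sketch below is a minimal example with two hand-written patterns; the regular expressions are illustrative and far from exhaustive, so they should not be treated as a complete privacy control.

```python
import re

# Illustrative patterns only; real PII detection needs much broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


redacted = redact("Contact jane.doe@example.com or call +1 (555) 010-9999.")
print(redacted)
```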

2. Long-Term Memory Limitations

LLMs have memory limitations: they retain little contextual, long-term information. These limitations can make it hard for a model to understand complex situations and can even cause hallucinations. Possible solutions are Memory Augmented Neural Networks and hierarchical prompting aids, which allow LLMs to retain the most crucial information and so improve the accuracy and contextual relevance of their responses.
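A much simpler workaround than the approaches named above is a rolling memory at the application level: keep the most recent turns verbatim and compress older ones. The sketch below is not a Memory Augmented Neural Network; it assumes a word budget instead of a token budget, and its default summarizer is a placeholder where a real system might call an LLM.

```python
def build_context(turns, max_words=50, summarize=None):
    """Keep the most recent turns that fit in max_words; compress the rest.

    Returns (summary_of_older_turns, recent_turns_kept_verbatim).
    """
    if summarize is None:
        # Placeholder summarizer: keep the first five words of each old turn.
        def summarize(old):
            return " / ".join(" ".join(t.split()[:5]) for t in old)

    kept, used = [], 0
    # Walk backwards from the newest turn until the budget is spent.
    for turn in reversed(turns):
        words = len(turn.split())
        if used + words > max_words:
            break
        kept.append(turn)
        used += words
    kept.reverse()

    older = turns[: len(turns) - len(kept)]
    summary = summarize(older) if older else ""
    return summary, kept


turns = [
    "user: my order 1234 arrived damaged and I want a refund",
    "bot: sorry to hear that, I have opened a refund request",
    "user: how long will it take",
]
summary, recent = build_context(turns, max_words=8)
print(summary)
print(recent)
```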

3. Integration with Current Systems

Combining LLMs and LLMOps functions with existing software is challenging because these systems are complex in many respects. Integration attempts can raise issues such as compatibility and interoperability.

4. Lifecycle Management Challenges

The pace at which LLMs develop and grow can overwhelm businesses trying to keep these fast-moving systems under control. In such large systems, a model has a strong tendency to drift from its intended functionality. Detecting and reducing model drift requires ongoing attention, along with versioning, testing, and management of data changes.

How is SoluLab Implementing LLMOps for Efficiency and Scalability?

LLMOps makes it easier to build and apply large language models. Through resilient monitoring systems, sound resource management, and regular improvement of service quality, SoluLab, an LLM development company, enhances operational efficiency and increases its capacity to address the new needs of companies.

This approach not only minimizes the risks associated with the integration of LLM but also inspires creativity in different spheres, including big data and customer support services.

With its clearly defined strategic direction for LLMOps, SoluLab is positioned to become one of the leaders in the efficient and sustainable application of AI tools, helping the company and its clients reach better results.

LLMOps as practiced by SoluLab can help take large language model work to the next level. High efficiency, scalability, and the minimization of risk are the main goals of this type of development. Contact us now to establish your company.

FAQs

1. What do you mean by LLMOps?

Large Language Model Operations, or LLMOps, are the processes and practices for managing the data and operations involved in large language models (LLMs).

2. How does LLMOps differ from MLOps?

The major difference between LLMOps and MLOps lies in where costs arise. LLMOps costs center on inference, while MLOps costs come mainly from collecting data and training models.

3. What is the lifecycle of LLMOps?

The LLMOps lifecycle comprises five stages: training, development, deployment, monitoring, and maintenance. Each stage has its own characteristics and is an important part of LLMOps solutions.

4. What are the stages in LLM Development?

The three major stages in LLM development are self-supervised learning, supervised learning, and reinforcement learning. Together, these stages make LLMs what they are today in any field.

5. Can SoluLab run LLMOps operations for a business?

SoluLab can run LLMOps for businesses in any field. Its domain-specific teams draw on Natural Language Processing (NLP) expertise to manage the lifecycle of large language models, from data preparation to monitoring.