Businesses and developers constantly seek smarter ways to build more accurate and efficient language models. Two popular approaches often come up in this conversation: Retrieval-Augmented Generation (RAG) and fine-tuning large language models (LLMs).

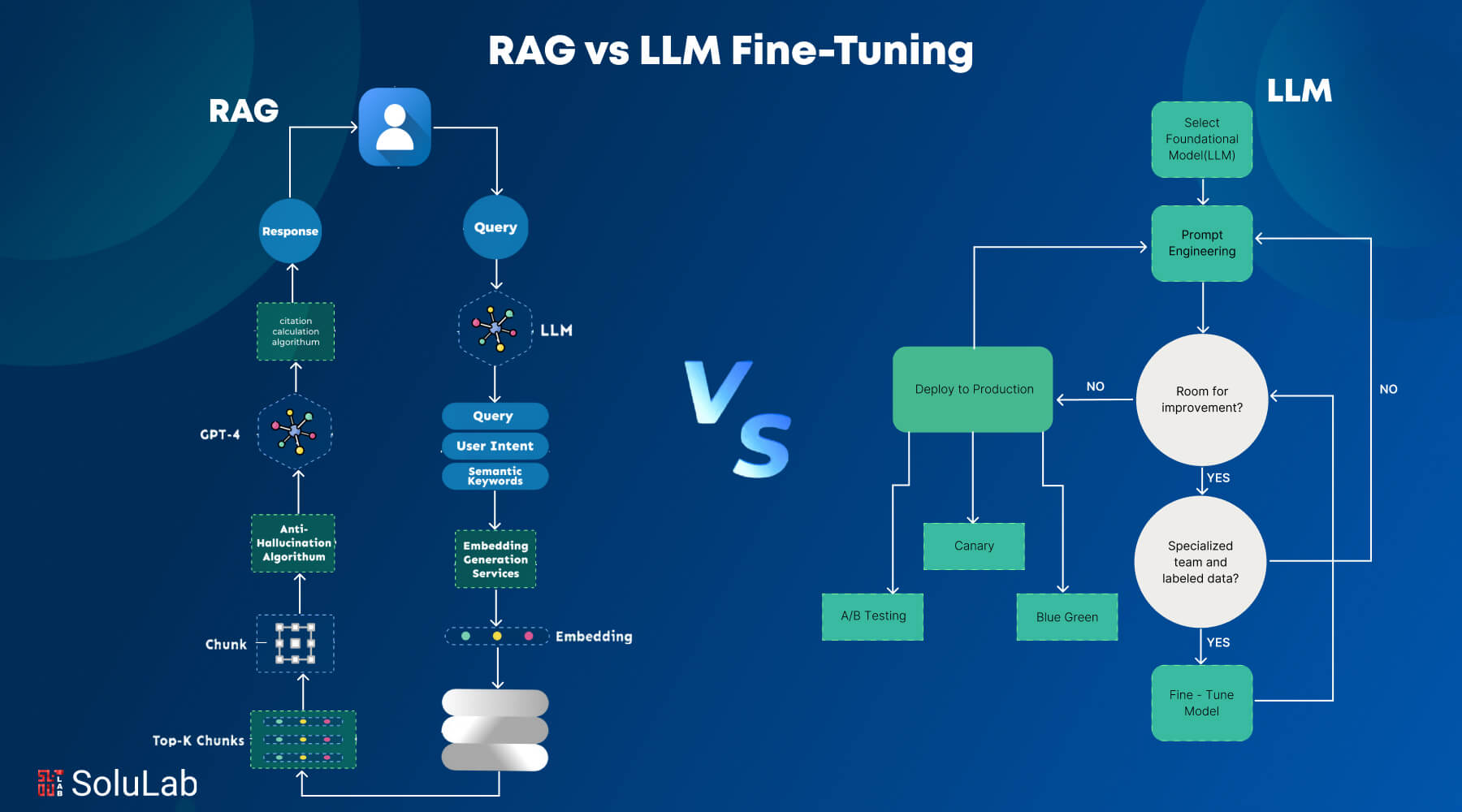

While both methods aim to improve a model’s output, they take very different paths to get there. RAG enhances responses by pulling in real-time data from external sources, while fine-tuning reshapes a model’s behavior by training it on specific datasets.

But which one should you choose? That depends on your use case, budget, and how often your data changes. In this blog, we’ll break down the core differences between RAG and fine-tuning and help you understand which method suits your needs best.

What is Retrieval-Augmented Generation?

Retrieval-Augmented Generation (RAG) is a framework introduced by Meta in 2020, designed to enhance large language models (LLMs) by connecting them to a curated, dynamic database. This connection allows the LLM to generate responses enriched with up-to-date and reliable information, improving its accuracy and contextual reasoning.

Key Components of RAG Development

Building a RAG architecture is a multifaceted process that involves integrating various tools and techniques. These include prompt engineering, vector databases like Pinecone, embedding vectors, semantic layers, data modeling, and orchestrating data pipelines. Each element is customized to suit the requirements of the RAG system.

Here are some key components of RAG (Retrieval-Augmented Generation) development, explained simply:

1. Retriever: This component searches a knowledge base (like documents or databases) to find the most relevant information based on the user’s query. It’s like the AI’s “research assistant.”

2. Knowledge Base / Vector Store: A structured collection of documents or data chunks, stored in a format that allows fast and accurate search, usually via embeddings in a vector database (e.g., Pinecone, FAISS).

3. Embedding Model: Converts user queries and documents into vector formats (numeric form) to be compared for relevance. Popular models include OpenAI’s or Sentence Transformers.

4. Generator (LLM): The large language model (like GPT-4) takes the retrieved documents and generates a human-like response, ensuring the answer is contextually relevant and grounded in the retrieved info.

5. Orchestration Layer: Coordinates the entire pipeline—from query input to retrieval to generation. Tools like LangChain or LlamaIndex help developers streamline this flow efficiently.

How Does RAG Work?

1. Query Processing: The RAG workflow begins when a user submits a query. This query serves as the starting point for the system’s retrieval mechanism.

2. Data Retrieval: Based on the input query, the system searches its database for relevant information. This step utilizes sophisticated algorithms to identify and retrieve the most appropriate and contextually aligned data.

3. Integration with the LLM: The retrieved information is combined with the user’s query and provided as input to the LLM, creating a context-rich foundation for response generation.

4. Response Generation: The LLM, empowered by the contextual data and the original query, generates a response that is both accurate and tailored to the specific needs of the query.

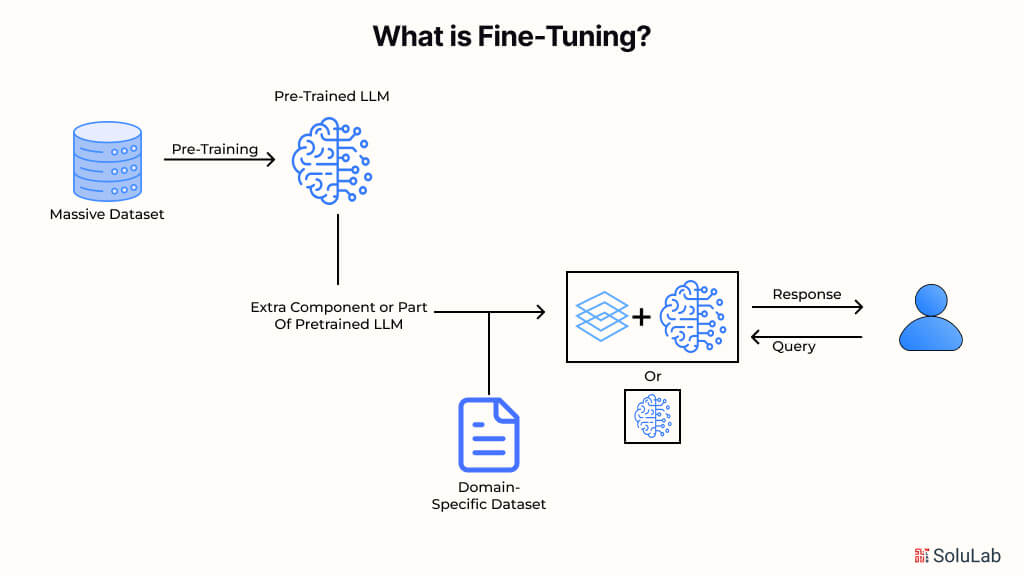

What is Fine-Tuning?

Fine-tuning offers an alternative method for developing generative AI by focusing on training a large language model (LLM) with a smaller, specialized, and labeled dataset. This process involves modifying the model’s parameters and embeddings to adapt it to new data.

When it comes to enterprise-ready AI solutions, both Retrieval-Augmented Generation (RAG) and fine-tuning aim for the same objective: maximizing the business value derived from AI models. However, unlike RAG, which enhances an LLM by granting access to a proprietary database, fine-tuning takes a more in-depth approach by customizing the model itself for a specific domain.

The fine-tuning process focuses on training the LLM using a niche, labeled dataset that reflects the nuances and terminologies unique to a particular field. By doing so, fine-tuning enables the model to perform specialized tasks more effectively, making it highly suited for domain-specific applications.

Types of Fine-Tuning for LLMs

Fine-tuning large language models (LLMs) isn’t one-size-fits-all—there are several approaches, each tailored to different goals, data sizes, and resource constraints. Here are some types of fine tuning for LLMs:

1. Supervised Adjustment

Using a task-specific dataset with labeled input-output pairs, supervised fine-tuning includes further training of a previously trained model. Through this process, the model can learn how to use the provided dataset to map inputs to outputs.

How it works:

- Make use of a trained model.

- As the model requires, create a dataset with input-output pairings.

- During fine-tuning, update the pre-trained weights to help the model adjust to the new task.

When labeled datasets are available, supervised fine-tuning is perfect for applications like named entity recognition, text classification, and sentiment analysis.

2. Instructional Adjustment

In the prompt template, instruction fine-tuning adds extensive guidance to input-output examples. This improves the model’s ability to generalize to new tasks, particularly ones that require instructions in plain language.

How it works:

- Make use of a trained model.

- Get a dataset of instruction-response pairs ready.

- Like neural network training, train the model using the instruction fine-tuning procedure.

Building chatbots, question-answering systems, and other activities requiring natural language interaction frequently use instruction fine-tuning.

3. PEFT, or parameter-efficient fine-tuning

A complete model requires a lot of resources to train. By altering only a portion of the model’s parameters, PEFT techniques lower the amount of memory needed for training, allowing for the efficient use of both memory and compute.

PEFT Techniques:

- Selective Method: Only fine-tune a few of the model’s layers while freezing the majority of them.

- LoRA, or the Reparameterization Method: Model weights can be reparameterized by adding tiny, trainable parameters and freezing the previous weights using low-rank matrices.

For instance, 32,768 parameters would be needed for complete fine-tuning if a model had dimensions of 512 by 64. The number of parameters can be lowered to 4,608 with LoRA.

- Additive Method: The additive method involves training additional layers on the encoder or decoder side of the model for the given job.

- Soft Prompting: Keep other tokens and weights frozen and train only the newly introduced tokens to the model prompt.

PEFT lowers training costs and resource needs, which is helpful when working with huge models that exceed memory restrictions.

4. Human Feedback Reinforcement Learning (RLHF)

RLHF uses reinforced learning to match the output of a refined model to human preferences. After the initial fine-tuning stage, this strategy improves the behavior of the model.

How it works:

- Prepare Dataset: Create prompt-completion pairs and rank them according to the alignment standards used by human assessors to prepare the dataset.

- Train Reward Model: Create a reward model that uses human feedback to provide completion scores.

- Revise the Model: Update the model weights based on the reward model using reinforcement learning, usually the PPO algorithm.

For applications requiring human-like outputs, including producing language that complies with ethical standards or user expectations, RLHF is perfect.

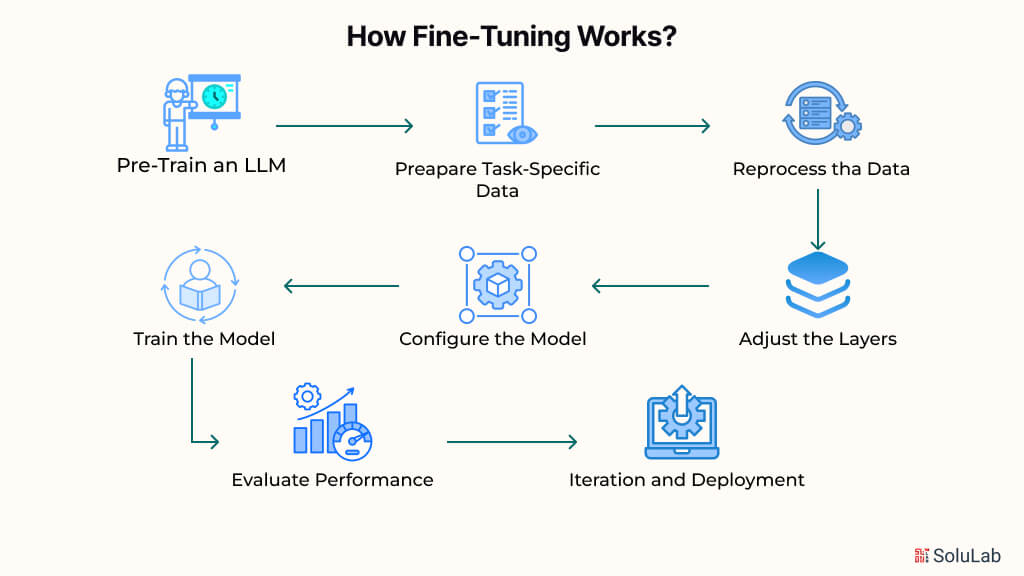

How Fine-Tuning Works?

Fine-tuning is a critical step for customizing large language models (LLMs) to perform specific tasks. Here’s a detailed explanation of the process, emphasizing fine-tuning RAG for beginners.

1. Pre-Train an LLM

Fine-tuning begins with a pre-trained large language model. Pre-training involves collecting massive amounts of text and code to develop a general-purpose LLM. This foundational model learns basic language patterns and relationships, enabling it to perform generic tasks. However, for domain-specific applications, additional fine-tuning is necessary to enhance its performance.

2. Prepare Task-Specific Data

Gather a smaller, labeled dataset relevant to your target task. This dataset serves as the basis for training the model to handle specific input-output relationships. Once collected, the data is divided into training, validation, and test sets to ensure effective training and accurate performance evaluation.

3. Reprocess the Data

The success of fine-tuning RAG for beginners depends on the quality of the task-specific data. Start by converting the dataset into a format the LLM can process. Clean the data by correcting errors, removing duplicates, and addressing outliers to ensure the model learns from accurate and structured information.

4. Adjust the Layers

Pre-trained LLMs consist of multiple layers, each processing different aspects of input data. During fine-tuning, only the top or later layers are updated to adapt the model to the task-specific dataset. The remaining layers, which store general knowledge, remain unchanged to retain foundational language understanding.

5. Configure the Model

Set the parameters for fine-tuning, including learning rate, batch size, regularization techniques, and the number of epochs. Proper configuration of these hyperparameters ensures efficient training and optimal model adaptation for the desired task.

6. Train the Model

Input the cleaned, task-specific data into the pre-trained LLM and begin training. A backpropagation algorithm is used to adjust the fine-tuned layers, refining the model’s outputs by minimizing errors. Since the base model is pre-trained, fine-tuning typically requires fewer epochs compared to training from scratch. Monitor performance on the validation set to prevent overfitting and make adjustments when necessary.

7. Evaluate Performance

Once the model is trained, test its performance using an unseen dataset to verify its ability to generalize to new data. Use metrics like BLEU scores, ROUGE scores, or human evaluations to assess the model’s accuracy and effectiveness in performing the desired task.

8. Iterate and Deploy

Based on the evaluation results, revisit the earlier steps to refine and improve the model. Repeat the process until the model achieves satisfactory performance. Once ready, deploy the fine-tuned LLM in applications where it can effectively perform the specified tasks.

By following these steps, those new to fine-tuning RAG can effectively adapt LLMs for specialized tasks, ensuring high performance and practical application.

Differences Between RAG and LLM Fine-Tuning

The table below highlights the key distinctions between LLM RAG vs Fine-Tuning to help understand when to choose each approach. Both methods serve the purpose of enhancing large language models (LLMs), but their methodologies and applications differ significantly.

| Aspect | Retrieval-Augmented Generation (RAG) | Fine-Tuning |

| Definition | RAG combines a pre-trained LLM with an external database, retrieving relevant information in real-time to augment the model’s responses. | Fine-tuning involves retraining an LLM using a labeled dataset to adjust the model’s parameters for specific tasks. |

| Objective | Provides accurate and contextually updated responses by grounding answers in real-time data. | Customizes the LLM itself to improve performance on a specific task or domain. |

| Data Dependency | Relies on a curated and dynamically updated external database for retrieving relevant information. | Requires a task-specific labeled dataset for training and validation. |

| Training Effort | Requires minimal training as the generative model remains unchanged; and focuses on retrieval optimization. | Requires significant computational resources for fine-tuning the pre-trained model on labeled data. |

| Model Adaptation | The model adapts dynamically by retrieving relevant external information. | The model is static after fine-tuning, tailored for specific tasks or domains. |

| Knowledge Update | Easier to update by simply modifying or adding to the external knowledge base. | Requires retraining or additional fine-tuning to incorporate new information. |

| Inference Cost | Higher during inference due to the retrieval process. | Lower inference cost as the fine-tuned model operates independently. |

| Examples | GPT-3 or ChatGPT integrated with vector databases (e.g., Pinecone, Elasticsearch). | Fine-tuning GPT-3 on legal documents for contract review or fine-tuning for specific APIs. |

| Customization Level | Limited to retrieval mechanisms and external knowledge adjustments. | Deep customization is possible through parameter updates for specific tasks. |

| Maintenance | Easier to maintain as updates are primarily to the knowledge base. | Requires ongoing fine-tuning for new tasks or updated knowledge. |

How to Decide Between Fine-Tuning vs RAG?

Choosing between LLM RAG (Retrieval-Augmented Generation) and fine-tuning depends on your specific use case and the resources at your disposal. While RAG is often the go-to choice for many scenarios, it’s important to note that RAG and fine-tuning are not mutually exclusive. Both approaches can complement each other, especially when resources are available to maximize their combined benefits.

-

Factors to Consider

Although fine-tuning offers deep customization, it comes with challenges such as high computational costs, time-intensive processes, and the need for labeled data. On the other hand, RAG, while less resource-heavy for training, involves complexity in building and managing effective retrieval systems.

-

Utilizing Both RAG and Fine-Tuning

When resources allow, combining both methods can be highly effective. Fine-tuning the model to understand a highly specific context while using RAG to retrieve the most relevant data from a targeted knowledge base can create powerful AI solutions. Evaluate your LLM fine-tuning vs RAG needs carefully and aim to maximize value for your stakeholders by focusing on the approach that aligns best with your goals.

-

The Role of Data Quality in AI Development

Whether you choose fine-tuning or RAG, both rely heavily on robust data pipelines. These pipelines must deliver accurate and reliable company data via a trusted data store to ensure the effectiveness of your AI application.

-

Ensuring Data Reliability with Observability

For either RAG or fine-tuning to succeed, the underlying data must be trustworthy. Implementing data observability—a scalable, automated solution for monitoring and improving data reliability—is essential. Observability helps detect issues, identify their root causes, and resolve them quickly, preventing negative impacts on the LLMs dependent on this data.

By prioritizing high-quality data and aligning your decision with stakeholder needs, you can make an informed choice between LLM RAG vs fine-tuning and even leverage the strengths of both.

Final Words

Retrieval-Augmented Generation (RAG) and LLM fine-tuning offer powerful ways to enhance AI performance, but they serve different purposes. RAG is ideal when you need real-time, up-to-date, or domain-specific information without altering the model itself.

However, fine-tuning customizes the model to perform better on specific tasks by training it on curated data. If you need flexibility and fresh knowledge, go with RAG. If you’re looking for deep customization and long-term improvements, fine-tuning is your path. The right choice depends on your specific use case, budget, and how often your content or data changes.

SoluLab helped InfuseNet, an AI platform enabling businesses to import and integrate data from texts, images, documents, and APIs to build intelligent, personalized applications. Its drag-and-drop interface connects advanced models like GPT-4 and GPT-NeoX, improving the creation of ChatGPT-like apps using private data while ensuring security and efficiency. With support for diverse services like MySQL, Google Cloud, and CRMs, InfuseNet empowers data-driven innovation for enhanced productivity and decision-making.

SoluLab, an AI development company can help you implement RAG-based models for dynamic information retrieval to fine-tune LLMs for niche applications.

FAQs

1. What is the difference between RAG and fine-tuning in AI development?

RAG (Retrieval-Augmented Generation) combines a generative language model with external data retrieval, providing up-to-date and domain-specific information. Fine-tuning involves training a pre-trained language model on a custom dataset to optimize its performance for specific tasks. RAG is ideal for dynamic data needs while fine-tuning excels in specialized applications.

2. Can RAG and fine-tuning be used together?

Yes, RAG and fine-tuning can complement each other. For example, you can fine-tune a model for a specific task and use RAG to retrieve additional relevant information dynamically, ensuring both accuracy and relevance in your AI application.

3. Which approach is more cost-effective: RAG or fine-tuning?

RAG is generally more cost-effective since it doesn’t require modifying the model but focuses on optimizing the retrieval system. Fine-tuning, on the other hand, can be resource-intensive due to the need for labeled data, computing power, and retraining.

4. How does data quality impact the success of RAG or fine-tuning?

Both RAG and fine-tuning rely on high-quality, reliable data. In RAG, the retrieval system depends on a well-curated knowledge base, while fine-tuning requires accurately labeled datasets. Poor data quality can result in inaccurate outputs and reduced model performance.

5. How can SoluLab help with RAG or fine-tuning projects?

SoluLab provides end-to-end LLM development solutions, specializing in both RAG and fine-tuning approaches. Our team ensures seamless integration, secure data handling, and scalable solutions tailored to your business needs. Contact us to explore how we can elevate your AI projects.